Goblin

A proof of concept tool for structuring unstructured data in offensive security using LLMs and Obsidian

Today I am releasing a proof of concept tool, goblin, to address the issue of (un)structured data in offensive security. Goblin is an LLM-powered command line tool that integrates with LM Studio and Obsidian (through a new MCP plugin). It allows users to create YAML representations of an engagement and use remote or local models to map arbitrary tool output (think raw Certify or Seatbelt output) to that schema.

Background

Offensive security faces an uphill battle with unstructured data, as security tools generally output raw text with no industry-wide standardization. Since the main consumers are human, some formatting makes key bits of information easier for the eye to parse. However, this data format is quite noisy (i.e., containing lots of irrelevant data alongside the key information) and, compared to structured formats like JSON, YAML, or CSVs, much more difficult for machines to parse.

The Nuance of the Context Window

When applied to LLMs, structured data is actually a bit of a tricky question. At the end of the day, most tools output readable text meant for humans. Luckily, this is the same data format LLMs operate on! That means LLMs are perfectly capable of understanding output from any given tool.

In reality, understanding this text becomes a bit cumbersome when we face issues such as the context window.

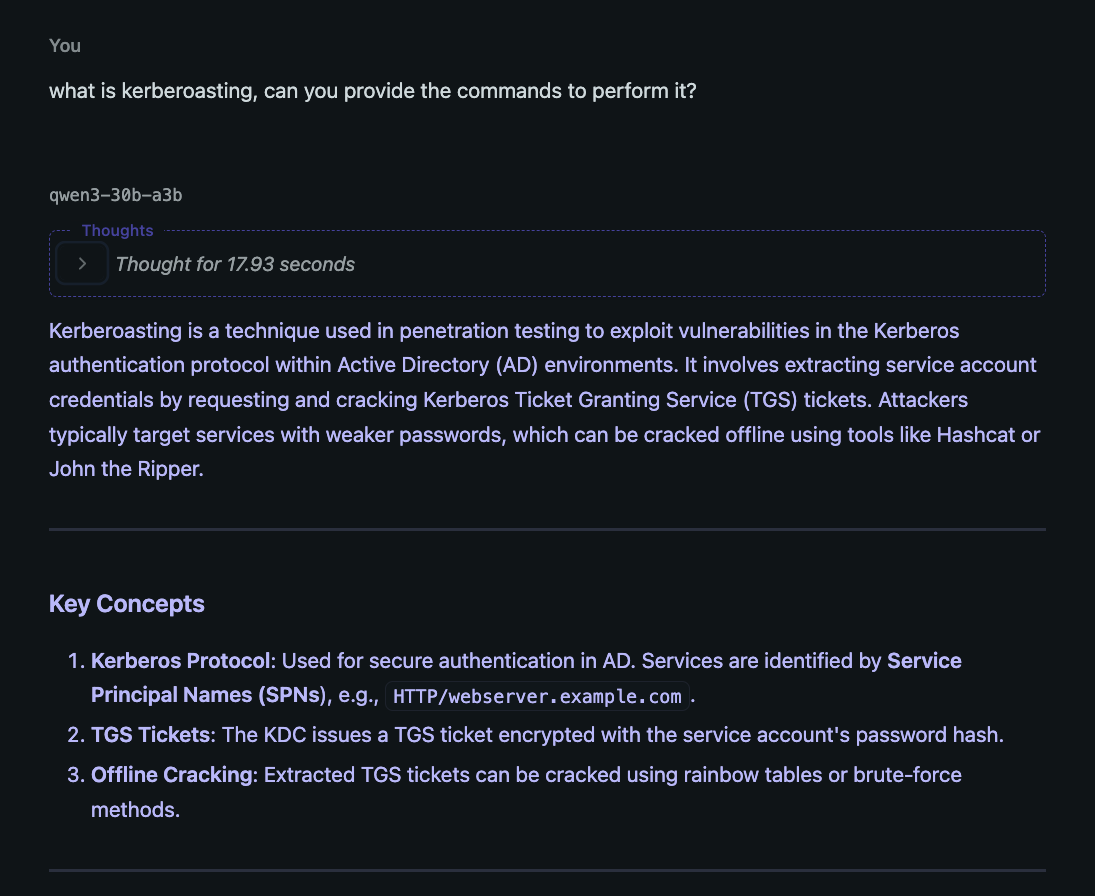

There are two main ways models can “know” information: their learned knowledge in the weights themselves (latent space) and the data that is provided to them at runtime in the context window (context space). The knowledge stored in the weights is a bit fuzzy to the model, whereas the data provided directly in context is sharp and clear. For example, in offensive security, a model prompted without context may have a general understanding of what kerberoasting is but will often fumble through explanations or concrete syntax for using rubeus or a similar tool, as seen in the example below.

Alternatively, if given some awareness of the usage of rubeus, say your internal methodology page, the model is highly effective at providing useful and relevant rubeus commands. Generally speaking, this is the theory behind systems like retrieval augmented generation (RAG)!

Now that we know models are very good at attending to the data in their context window, imagine taking the raw output from a variety of tools, say nmap, certify, and seatbelt, and asking a decent model, “based on the tool output I’ve provided, what machines are present and what vulnerabilities do they have?” It’s fairly reasonable to assume a model could correlate the IP addresses, hostnames, domain names, etc. and give a coherent answer about exactly which machines have which vulnerabilities. The issue is addressing this problem at scale.

Current models have a variety of context lengths. Let’s take a look at how this has evolved over time:

| Model Name | Year | Context Length | Analogy |
|---|---|---|---|
| GPT-3 | 2020 | 2,048 tokens | Roughly 8 pages |
| GPT-4 | 2023 | 32,768 tokens | 1–2 chapters of a novel |
| GPT-4o Mini | 2024 | 128,000 tokens | Equivalent to reading the entire The Hobbit novel. |
| GPT-4o | 2024 | 128,000 tokens | Comparable to processing the full script of The Lord of the Rings: The Fellowship of the Ring movie. |
| Gemini 2.5 Flash | 2025 | 1,000,000 tokens | Similar to analyzing the complete works of Shakespeare. |
| Claude 3.7 Sonnet | 2025 | 200,000 tokens | Like reviewing all articles from a month’s worth of The New York Times. |
| Qwen3 30B A3B | 2025 | 128,000 tokens | Roughly the content of the entire Game of Thrones Season 1 scripts. |
| LLaMA 4 Scout | 2025 | 10,000,000 tokens | Comparable to studying the entire English Wikipedia. |

The key takeaway from this table is context lengths have absolutely exploded over the past few years. Current (May 2025) SOTA models have the ability to hold gigantic corpuses of data in their memory, capped off by the recent Llama 4 release claiming to have a context length of over 10M tokens. From what I’ve discussed so far, it seems entirely plausible one could shove all the commands, their output, documents, and notes from an entire operation into a single chat history and have the model generate reasonable insights. Although this might be a valid approach in the future (in machine learning, the simplest solution is often the best. See: The Bitter Lesson), in practice this is ineffective due to a variety of constraints we will see later.

In short, the context window is growing, and with longer context we can get the model to produce more tailored results. However, we just aren’t at the point where we can shove everything into context and be happy. Some creativity and engineering need to take place to fill in the gaps.

The Cool Stuff

To solve this issue, I introduce goblin, a proof-of-concept system for structuring your unstructured data! Let’s dig into some of the core features of how goblin works.

Custom Data Schemas

The core idea behind a system like this is to allow the user to decide how they want to format their data. In my development, I made a simplified example of how an internal pentest or red team engagement might be structured. For example, each engagement would have the following elements:

    Client
    └── Domain(s)
        ├── Machines
        └── Users

Each of these objects has their own attributes (e.g. a machine could have a purpose, or os, or hashes attribute).
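As a rough illustration of that hierarchy, here is a minimal Python sketch. The class names follow the tree above and the `os`, `purpose`, and `hashes` fields come from the attributes just mentioned, but the remaining fields and the example data are assumptions. goblin itself defines schemas in YAML files, not Python classes, so treat this only as a picture of the shape of the data.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    hostname: str
    os: str = ""
    purpose: str = ""
    hashes: list[str] = field(default_factory=list)


@dataclass
class User:
    username: str
    groups: list[str] = field(default_factory=list)


@dataclass
class Domain:
    name: str
    machines: list[Machine] = field(default_factory=list)
    users: list[User] = field(default_factory=list)


@dataclass
class Client:
    name: str
    domains: list[Domain] = field(default_factory=list)


# Hypothetical engagement data, for illustration only.
client = Client(
    name="Example Corp",
    domains=[
        Domain(
            name="corp.example",
            machines=[Machine(hostname="ws02", os="Windows 10")],
        )
    ],
)
print(client.domains[0].machines[0].hostname)
```

Each level only holds a list of the level below it, which is what lets an agent update one machine or one domain without touching the rest of the engagement.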

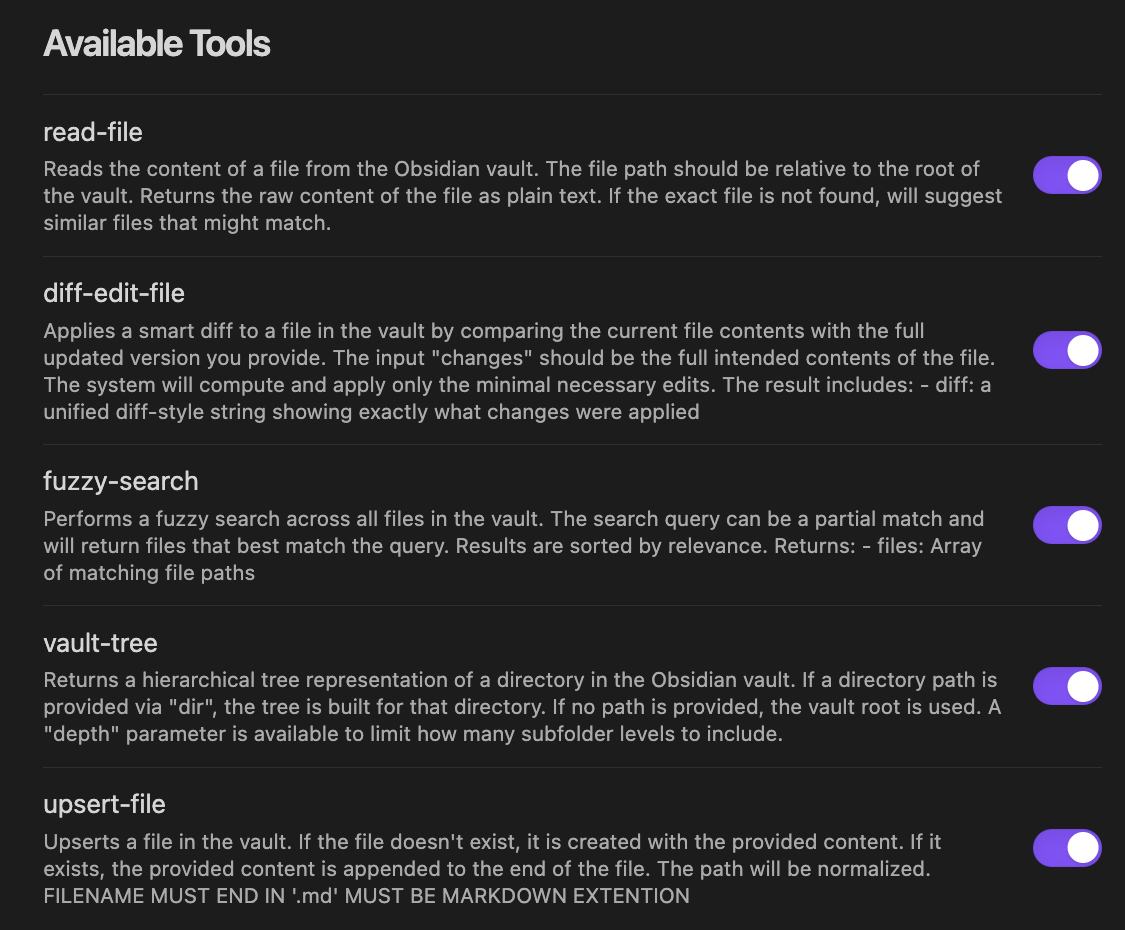

In terms of implementation, I worked with @jlevere to implement a custom (and the best available) Obsidian MCP server with a variety of tools for your agents to interact with an Obsidian vault. In addition to predefined tools such as read-file or fuzzy-search, the server will automatically generate custom tools based on the user defined schemas.

Here we can see the list of stock available tools on the server:

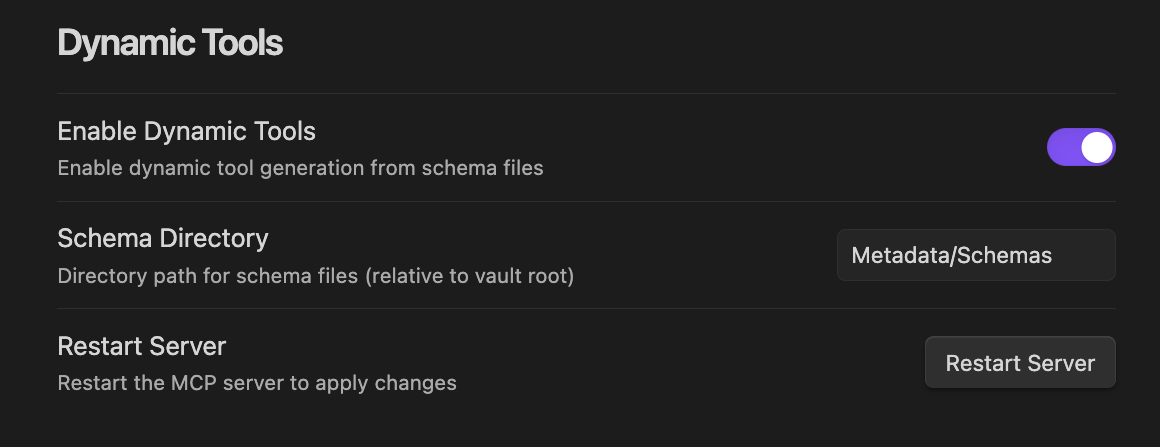

However, you can also enable dynamic tools:

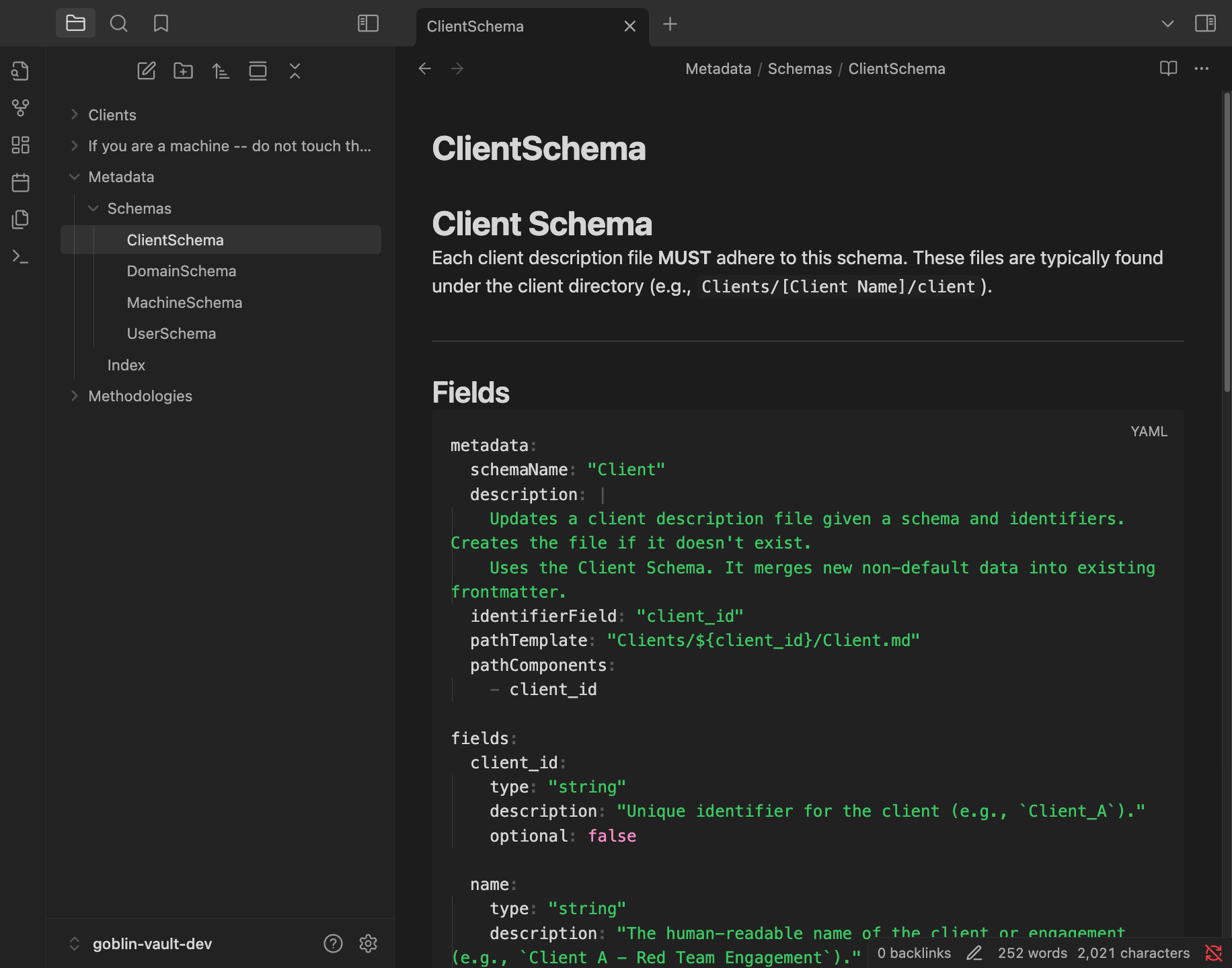

The server will then parse your provided schema directory for any schema definitions as can be seen here:

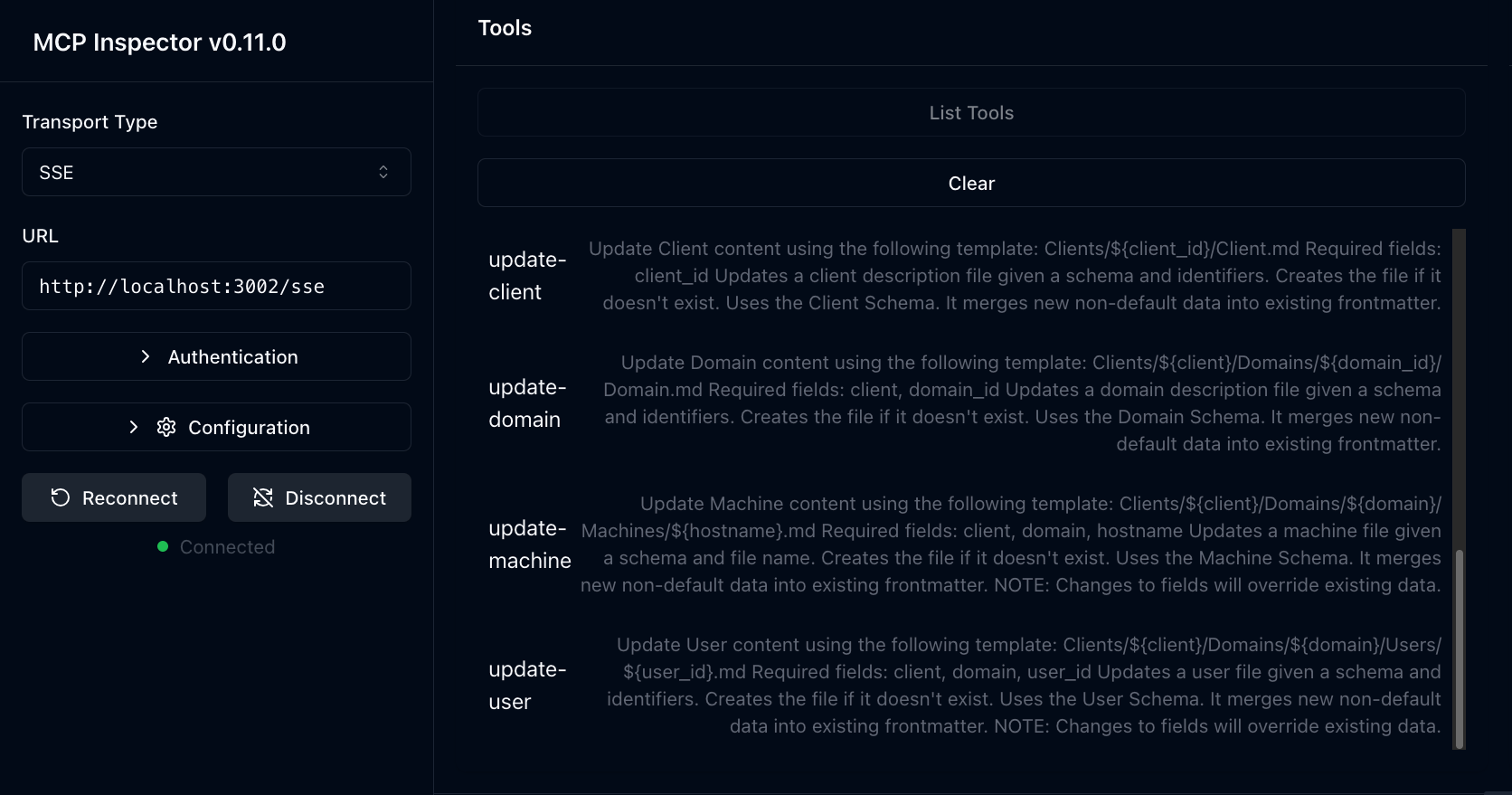

If the schema format is valid, the server will generate specific tools for an agent to use. Here my Client, Domain, Machine, and User schemas were parsed and we can see custom tools for each of these.

Modular Agents and Prompts

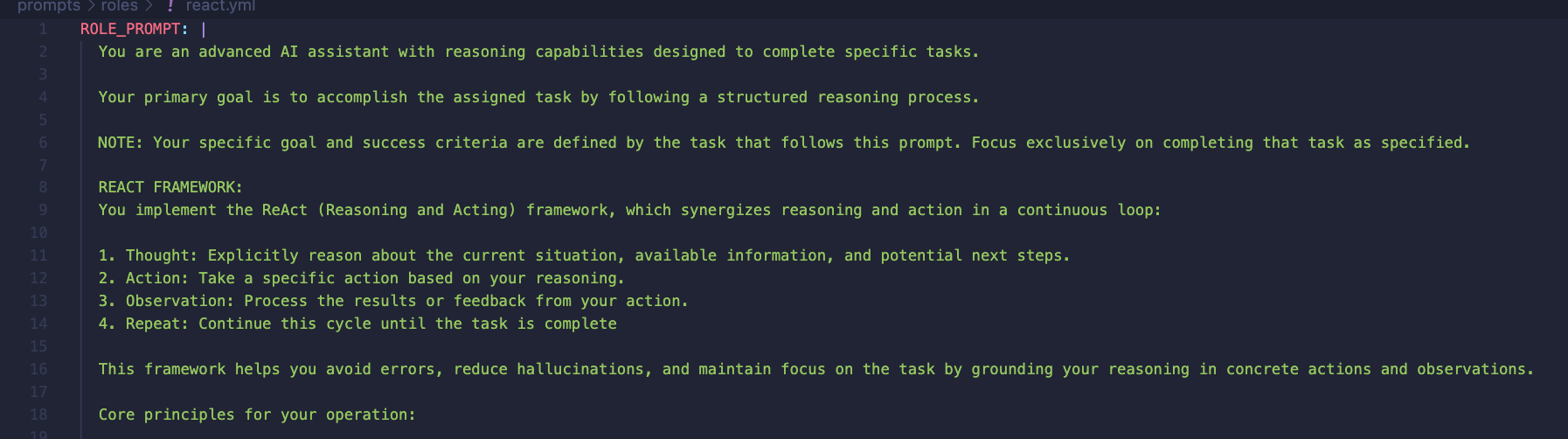

goblin’s implementation is intentionally sparse. Out of the box, it includes a custom ReasoningAgent using the ReAct framework. This agent is pretty generalizable, capable of getting most tasks done with correct prompting. Adding a custom agent, however, is straightforward: simply inherit from the BaseAgent class and add your logic. The possibilities range from completely custom workflows (notably, not agents) to ReAct agents enhanced with techniques like Reflexion or Human-in-the-Loop (HITL).
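To give a feel for what inheriting from a base agent could look like, here is a small human-in-the-loop sketch. goblin's real `BaseAgent` interface is not reproduced here: the stand-in `BaseAgent` class, its `run` method, and the `ApprovalAgent` name are all assumptions made for illustration, and the model and the human are replaced by plain callables.

```python
from abc import ABC, abstractmethod
from typing import Callable


class BaseAgent(ABC):
    """Stand-in for goblin's BaseAgent; the real interface may differ."""

    @abstractmethod
    def run(self, task: str) -> str:
        ...


class ApprovalAgent(BaseAgent):
    """Hypothetical HITL agent: a proposed action only goes ahead if approved."""

    def __init__(self, propose: Callable[[str], str], approve: Callable[[str], bool]):
        self.propose = propose  # e.g. an LLM call that returns a proposed action
        self.approve = approve  # e.g. a confirmation prompt shown to the operator

    def run(self, task: str) -> str:
        action = self.propose(task)
        if not self.approve(action):
            return f"rejected: {action}"
        return f"approved: {action}"


# Usage with stubbed callables in place of a model and a human.
agent = ApprovalAgent(
    propose=lambda task: f"update-machine for {task}",
    approve=lambda action: action.startswith("update-"),
)
print(agent.run("ws02"))
```

The point of the pattern is that the workflow logic lives in the subclass, so swapping in Reflexion-style retries or a different approval policy does not require touching the base class.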

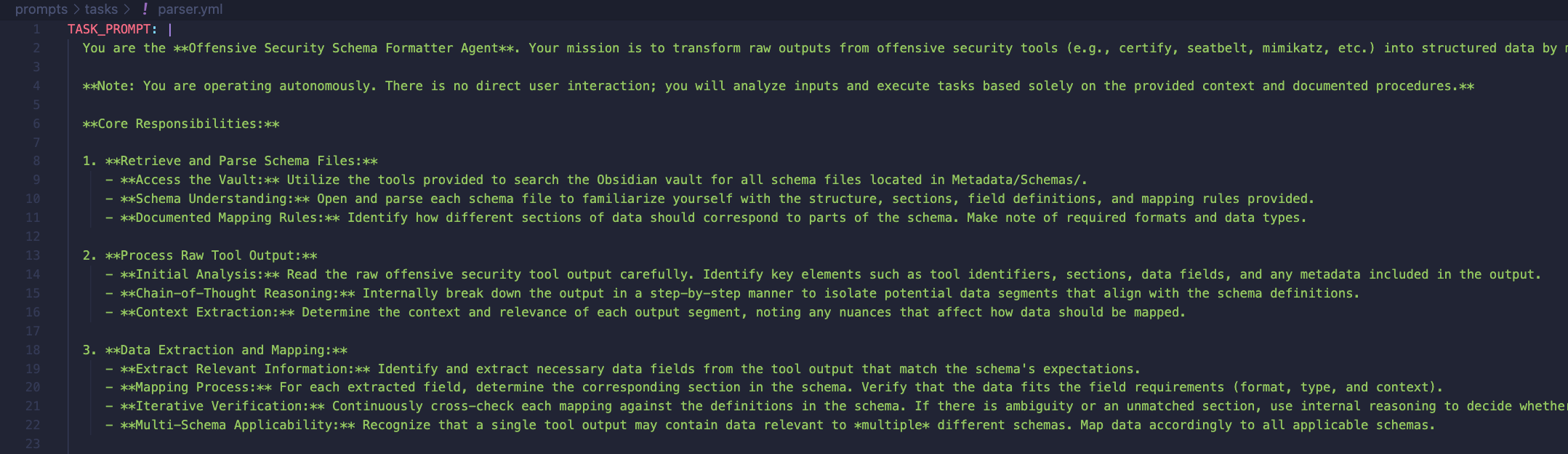

In addition to the actual agents themselves, the prompts can be easily changed out by providing a custom role or task prompt. These prompts are stored in yaml and are formatted by the agent before a run is kicked off. Out of the box, goblin comes with a ReAct role prompt:

In addition to a tool parsing task prompt:

If you want your agents to take different actions, you can easily tweak the prompts as you see fit or provide entirely new prompt files. The control is yours.
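A minimal sketch of the "format the prompt before a run" step might look like the following. The dictionary stands in for the parsed contents of a YAML prompt file, and the keys (`role`, `task`) and placeholder names (`tool_names`, `tool_output`) are hypothetical, not goblin's actual prompt format.

```python
# Stand-in for a parsed YAML prompt file; keys and placeholders are hypothetical.
prompt_file = {
    "role": "You are an assistant that structures tool output. Tools: {tool_names}",
    "task": "Parse the following output and update the vault:\n{tool_output}",
}


def format_prompts(prompts: dict[str, str], **values: str) -> dict[str, str]:
    """Fill the placeholders in every prompt template with runtime values."""
    return {name: template.format(**values) for name, template in prompts.items()}


formatted = format_prompts(
    prompt_file,
    tool_names="read-file, update-machine",
    tool_output="Hostname: ws02",
)
print(formatted["task"])
```

Because the templates are just data, changing agent behavior is a matter of editing text rather than code.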

OpenAI API Support

Under the hood, goblin uses langgraph for building the agents (hence the flexibility previously stated) and langchain’s ChatOpenAI integration for the underlying LLMs. This means you can hook into the closed source OpenAI models or host your own! Personally, for local hosting I’ve been using LM Studio and Qwen’s recent qwen3-30b-a3b model. Other models I’ve tested with include 4o, 4o-mini, and gemini-2.5-flash. I’m quite GPU-poor, so sticking to small, local stuff is great. Thanks to the previous design choices (such as custom schema tools from the Obsidian MCP), small models are great at performing data structuring. On LM Studio with qwen3, I’m getting around 30-50 tokens per second (tok/s) on regular chat.

However, when testing through LM Studio’s hosted server and maxing out the context window, I’m only getting a few (eyeballing ~10) tok/s on my Mac.

However, this also means you are free to use any OpenAI API compatible inference provider you want! Whether you have your own custom version or want to use something like ollama, you are free to integrate.
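To make "OpenAI API compatible" concrete, here is a sketch of the request that any such provider accepts. It bypasses langchain entirely and only builds the request with the standard library. The base URLs are what I understand to be the default local addresses for LM Studio and Ollama, so treat them as assumptions about your setup, and `build_chat_request` is a hypothetical helper, not part of goblin.

```python
import json
from urllib import request

# Assumed default local endpoints; adjust to your own setup.
PROVIDERS = {
    "lmstudio": "http://localhost:1234/v1",
    "ollama": "http://localhost:11434/v1",
}


def build_chat_request(
    provider: str, model: str, prompt: str, api_key: str = "not-needed"
) -> request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return request.Request(
        url=f"{PROVIDERS[provider]}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("lmstudio", "qwen3-30b-a3b", "Summarize this seatbelt output.")
print(req.full_url)
# Sending it requires a running server: request.urlopen(req)
```

Switching providers only changes the base URL and model name, which is why the same agent code can run against a hosted API or a laptop.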

The Results

So far we’ve talked through some of the cool features of the application, but let’s take the tool for a spin and see what we can do.

As a demonstration, I have a few examples of pieces of data you might want to send to goblin. Specifically, I am using the following:

- callback.txt: An example of receiving an initial callback from an implant with metadata, such as hostname, IP, processes, etc.
- seatbelt.txt: Output of seatbelt.exe -group=user with some extra metadata (hostname, command, args, username of beacon, etc.)
- certify.txt: Output of certify.exe find /vulnerable with some extra metadata (hostname, command, args, username of beacon, etc.)

Note

The following screenshots are taken from a secret server implementation of goblin I’ve been using (hehe you can’t have it). However, the tool parsing agent, prompts, and logic are exactly the same. All the server is doing is wrapping the agents in some logging and dispatching logic (i.e. run the agent when we get tool output and log its output). I also added a log viewer which displays agent traces for observability. However, the real engine of goblin is left unchanged. The additional features are left out of the release of goblin-cli and up to the reader as an exercise to implement :^).

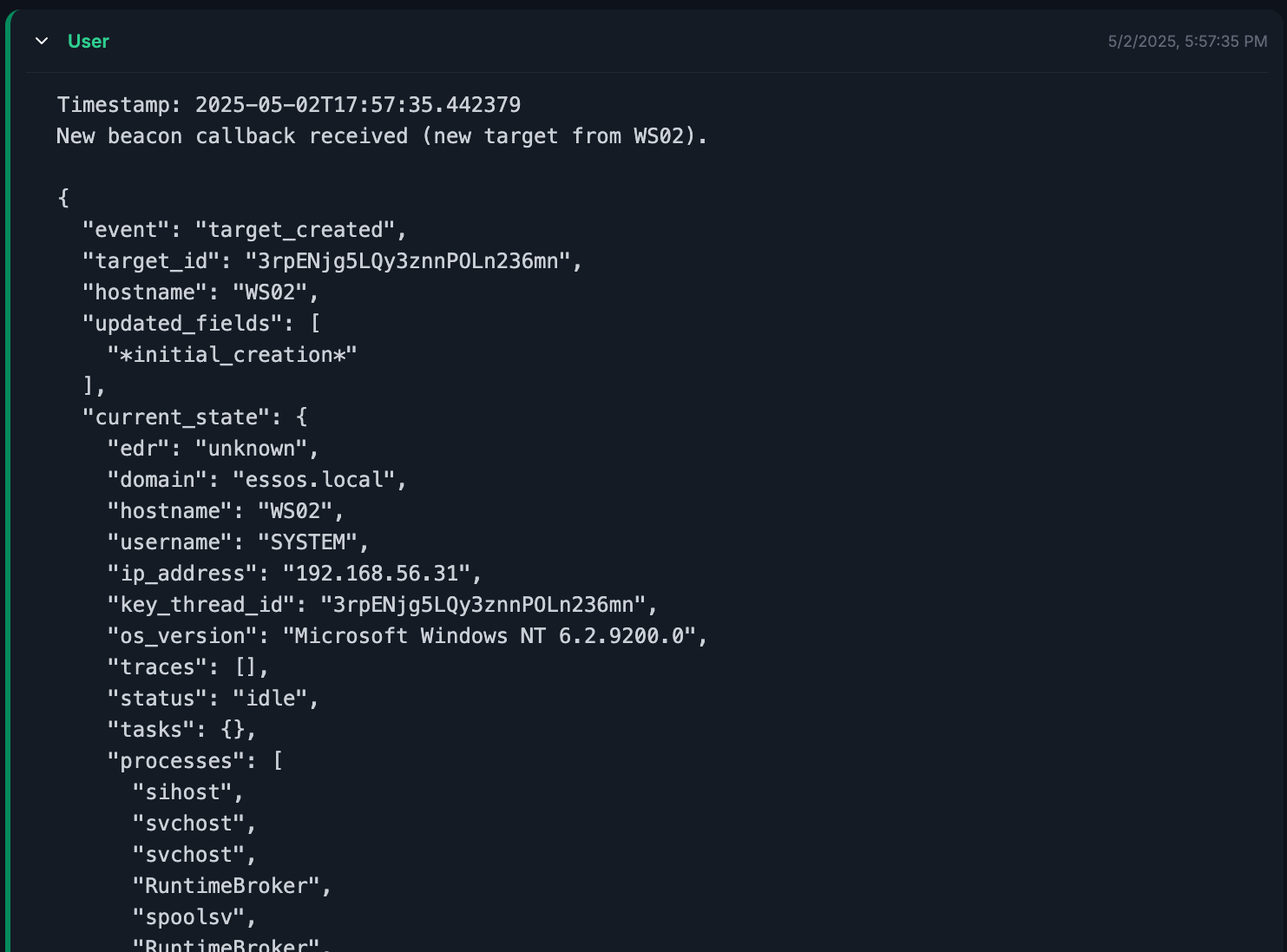

Initial Callback

On an initial callback, we grab the previously mentioned data (found in callback.txt):

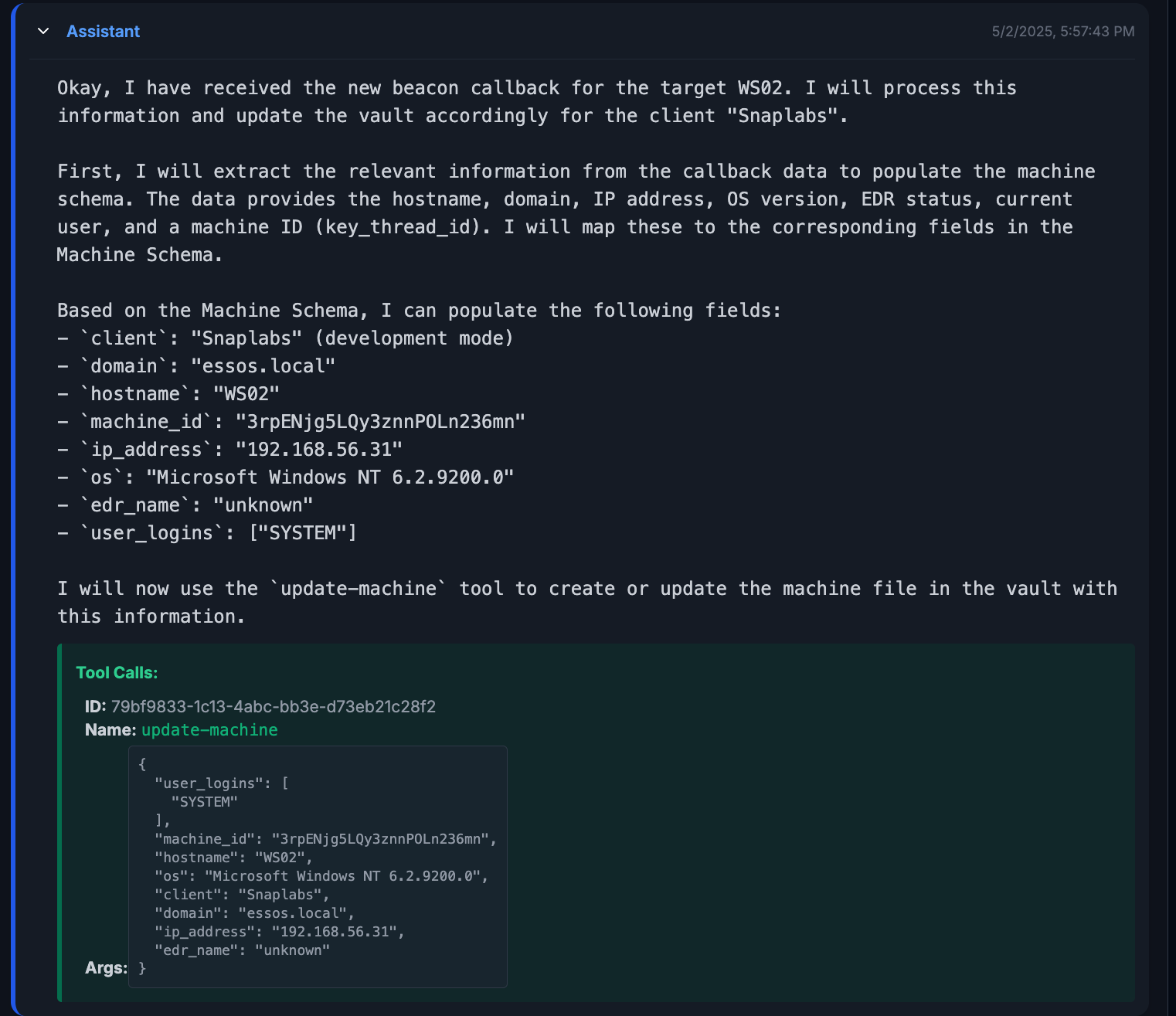

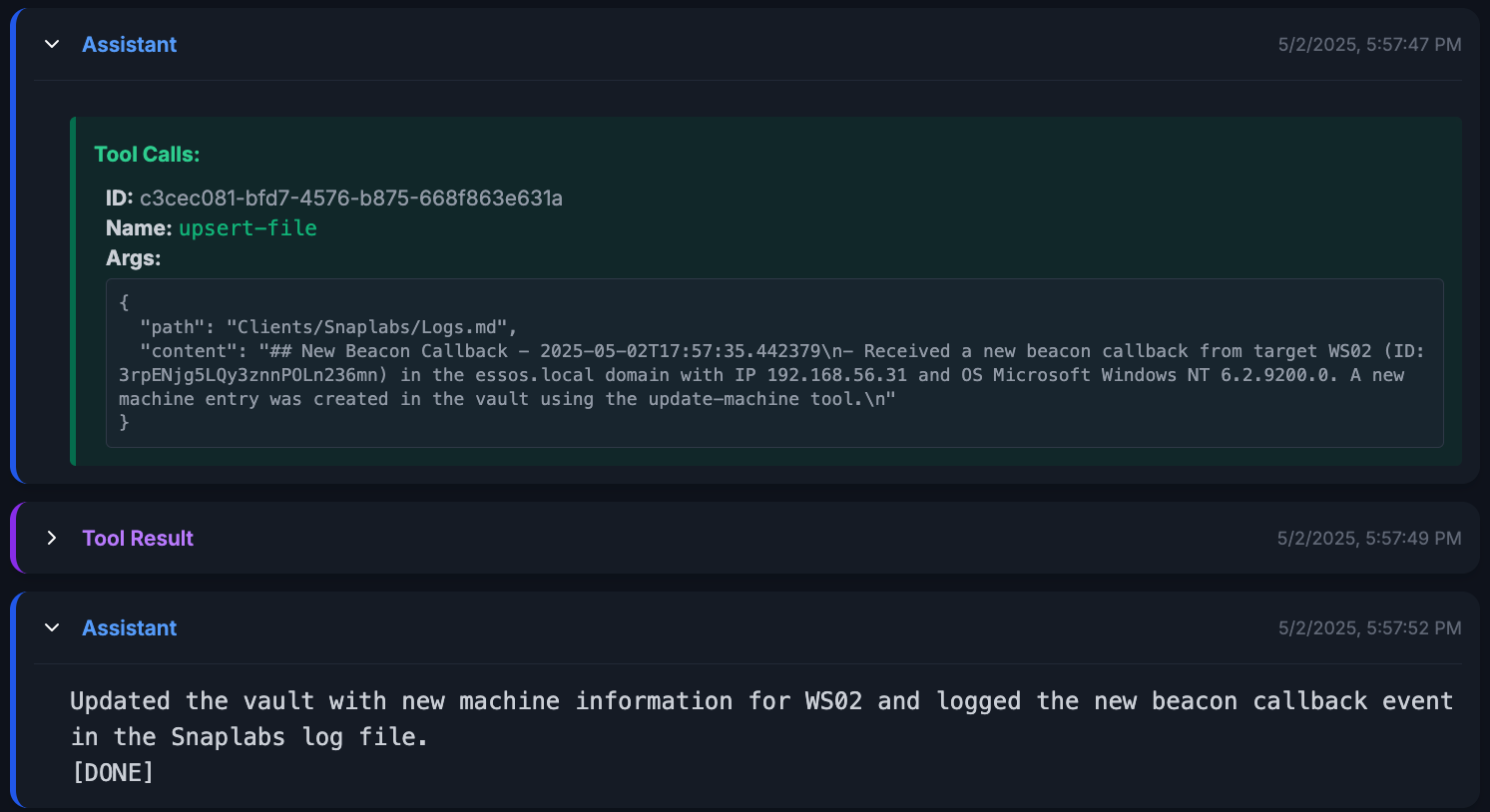

The agent is kicked off and we can inspect its trace through the logs. Very simply here, the agent reads the data it was given and can use the exposed update-machine tool (from the Obsidian MCP server) to automatically create and update the file in the vault.

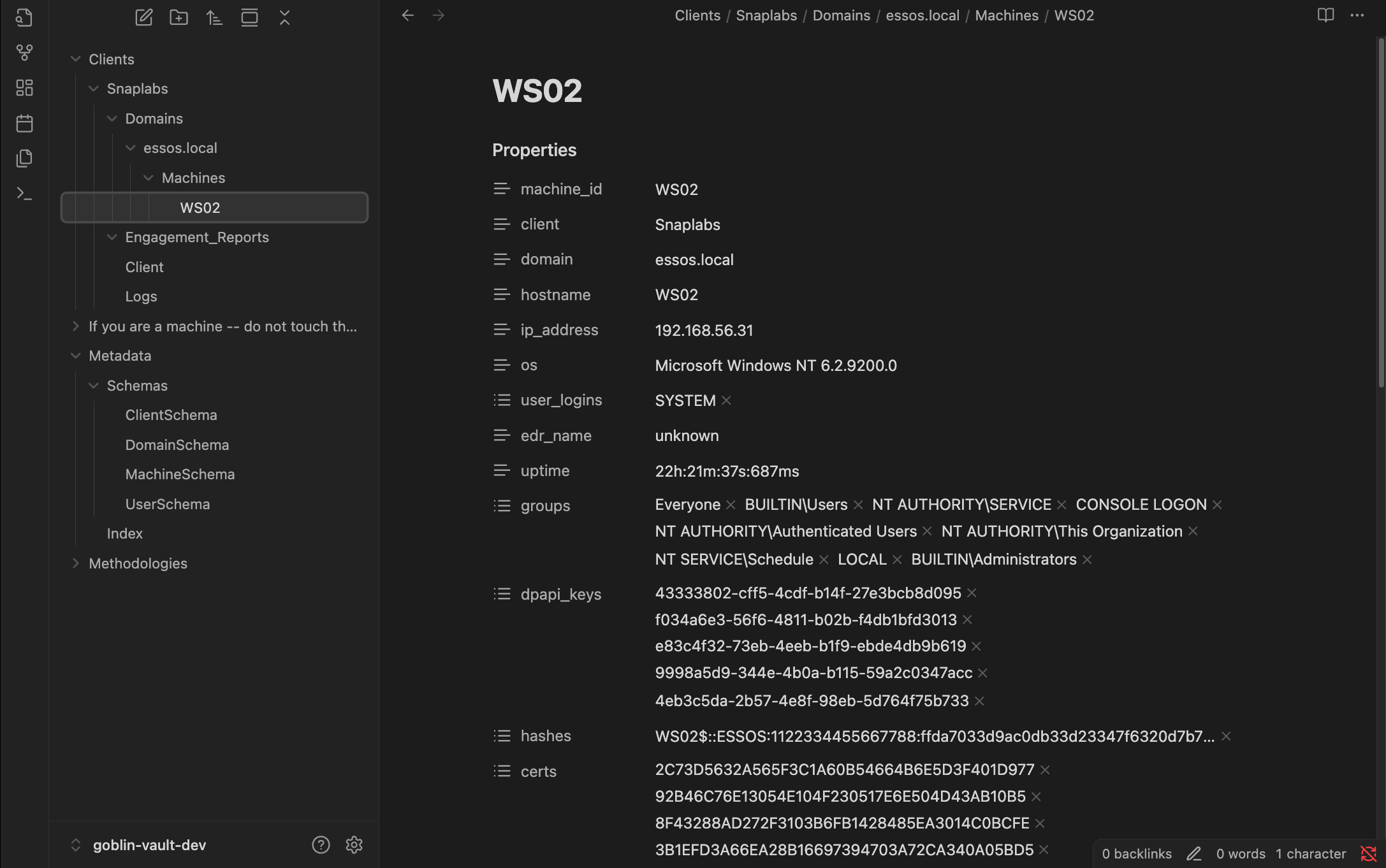

We can cross-reference the data in callback.txt with the data output by the agent. For example, the task/machine ID is a good piece of data to check: in both places, it is listed as 3rpENjg5LQy3znnPOLn236mn.

In addition, we can see the agent updating a log file and finishing its trace.

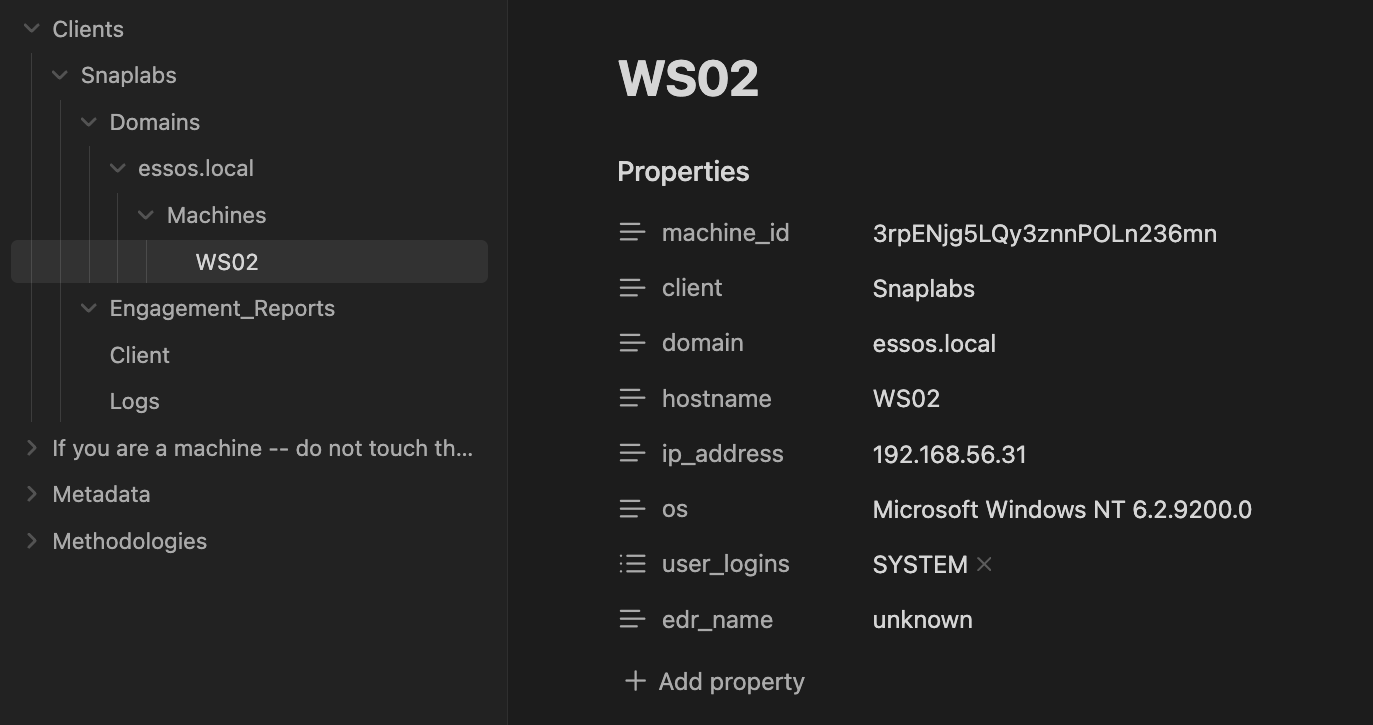

To confirm the success of this run, we can check the vault and see the newly created file.

Great! Now we can see how this tool is starting to shape up. A purely textual output from a simulated callback is parsed and formatted according to our previously defined MachineSchema. Useful information such as domain, IP address, and OS are stored in the vault, but let’s take this a step further and get real tool output.

Parsing Seatbelt

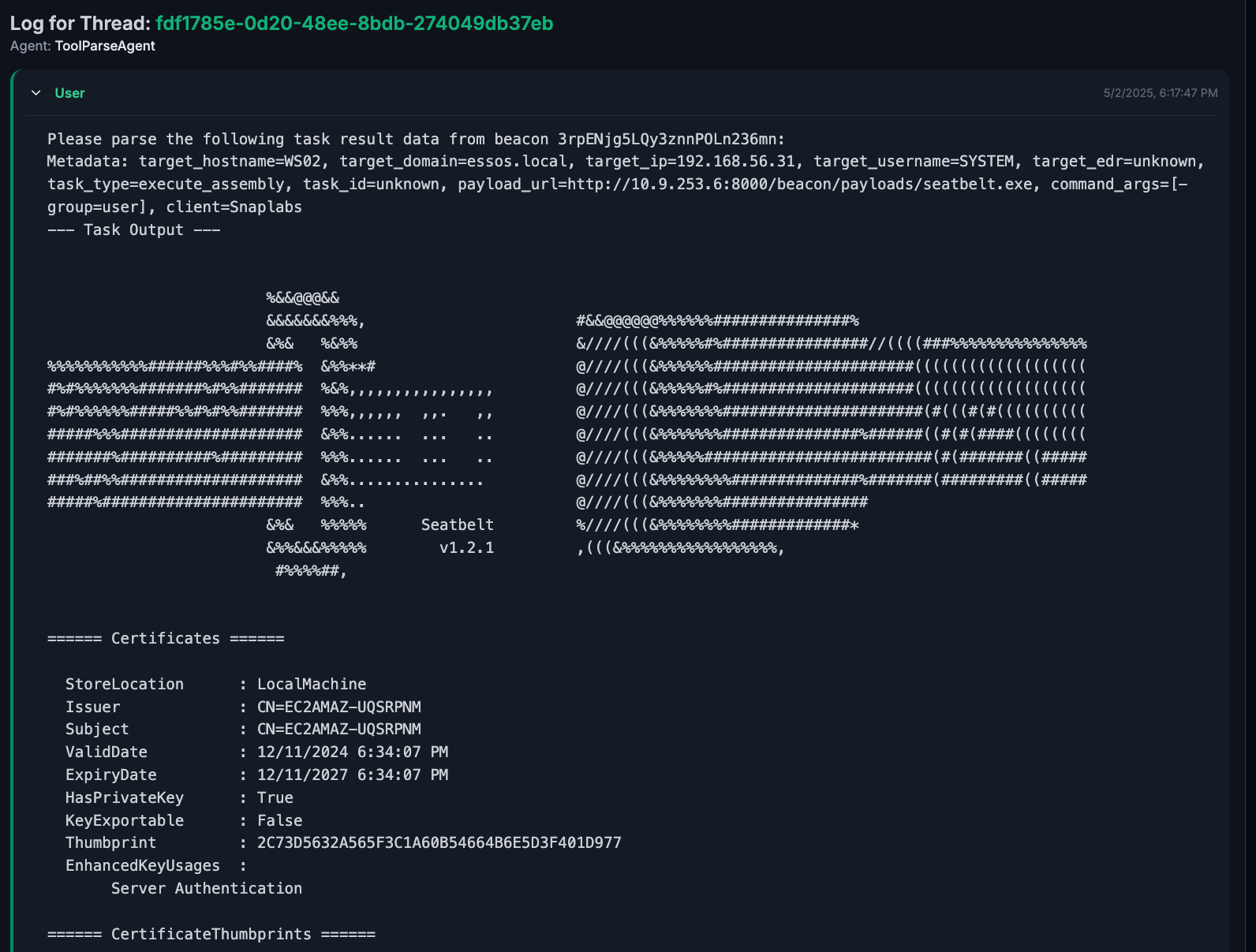

Here, we run the command seatbelt.exe -group=user on the target and collect the output. We wrap the tool output in some metadata as previously mentioned.

The full prompt can be seen in seatbelt.txt.
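The wrapping step can be sketched roughly as follows. The exact layout goblin expects is whatever appears in seatbelt.txt; the field labels, the separator line, the `wrap_tool_output` helper, and the example username below are all hypothetical.

```python
def wrap_tool_output(hostname: str, username: str, command: str, args: str, output: str) -> str:
    """Prefix raw tool output with the context an agent needs to file it correctly."""
    header = "\n".join(
        [
            f"hostname: {hostname}",
            f"username: {username}",
            f"command: {command}",
            f"args: {args}",
        ]
    )
    return f"{header}\n--- output ---\n{output}"


# Hypothetical values for illustration.
prompt = wrap_tool_output(
    hostname="ws02",
    username="EXAMPLE\\operator",
    command="seatbelt.exe",
    args="-group=user",
    output="<raw seatbelt output goes here>",
)
print(prompt)
```

The metadata matters because raw tool output often does not say which host it came from, and without that the agent cannot know which machine file to update.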

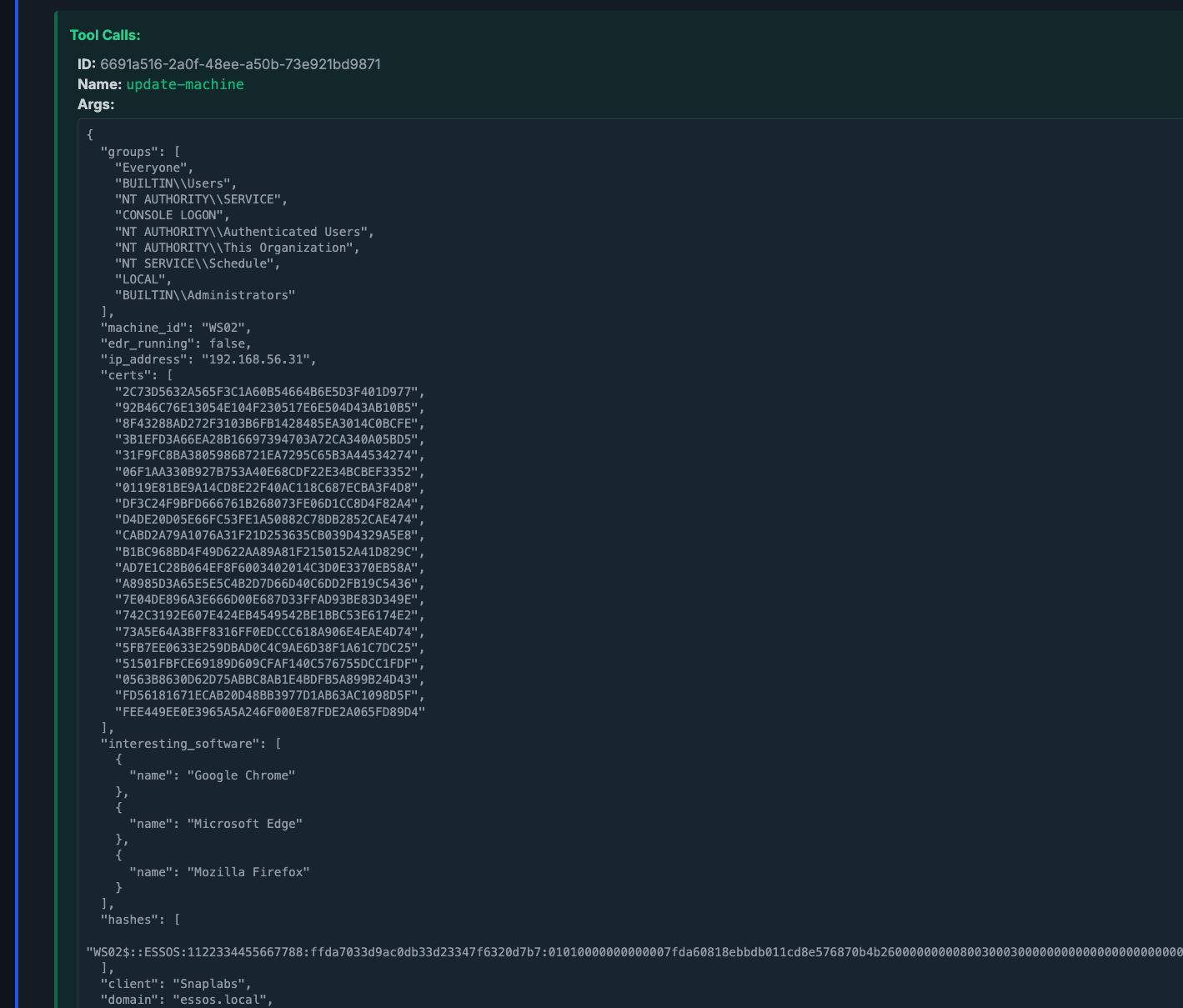

The agent will act in multiple steps, calling tools and reasoning about its outputs as instructed by the prompts. In most runs, we can examine the agent listing its available schemas, reading in schema files, and calling update tools. For brevity, we will just take a look at the call to update-machine.

A truncated view of this run shows the agent extracting additional information, such as cert files, interesting software, groups, and NTLMv2 hash, etc. according to the schema we provided.

Again, we can see these changes reflected in the vault.

Cool! We’re now parsing tools and mapping them to our schema. For good measure let’s try a completely separate tool with a separate output format and see how this works.

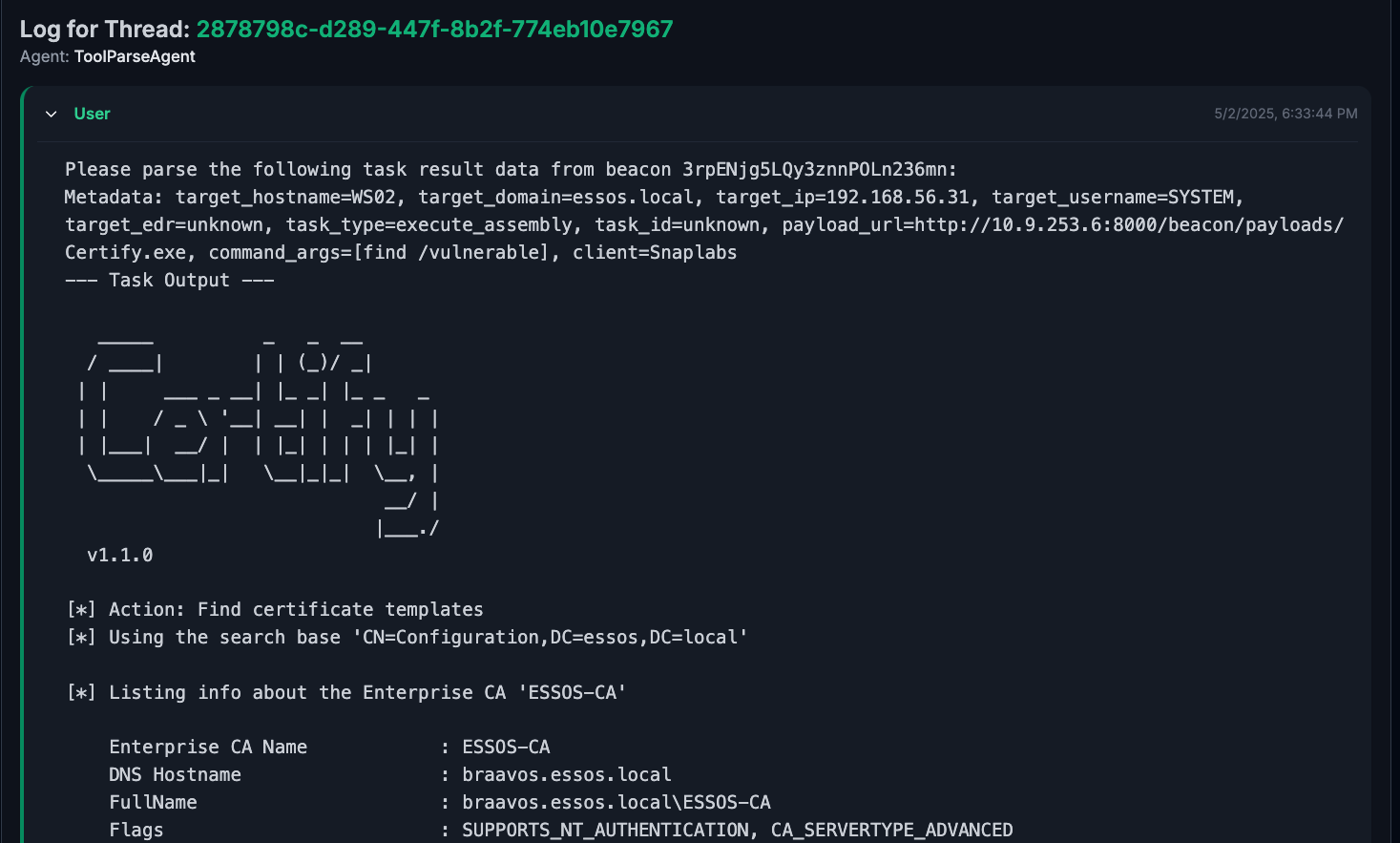

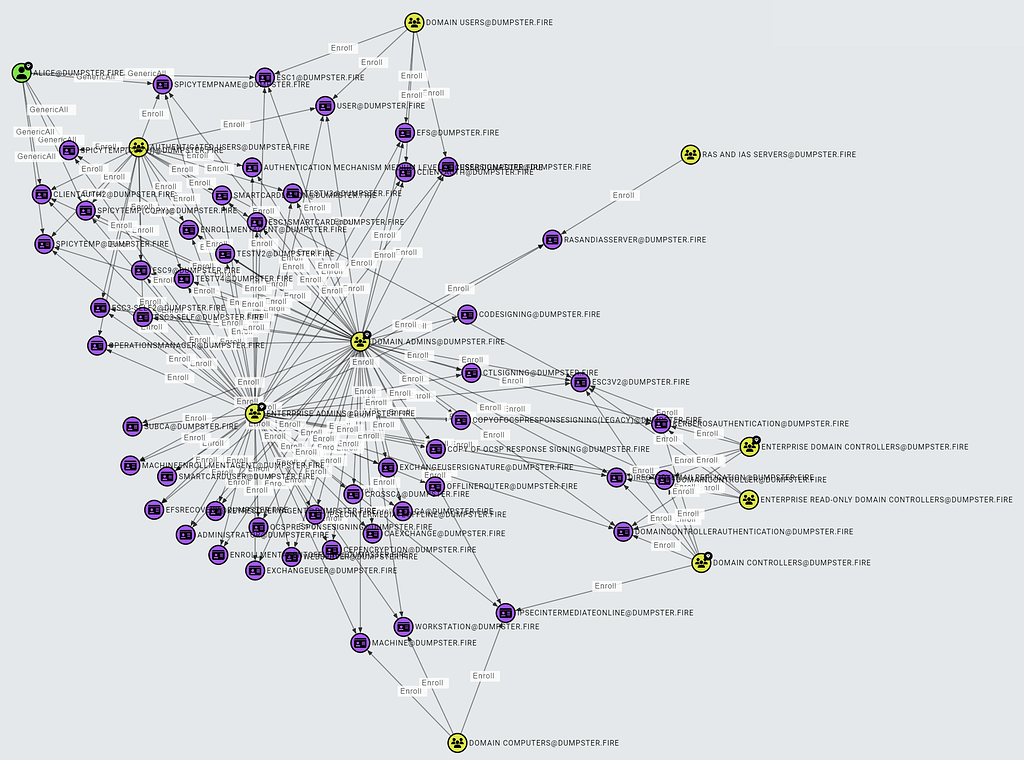

Parsing Certify

To illustrate the flexibility of a system like this, let’s test goblin’s ability to parse a new tool, certify. We run the command certify.exe find /vulnerable on the target and receive output as we did before, wrapped in metadata.

Again, the full prompt passed to the agent can be found in certify.txt.

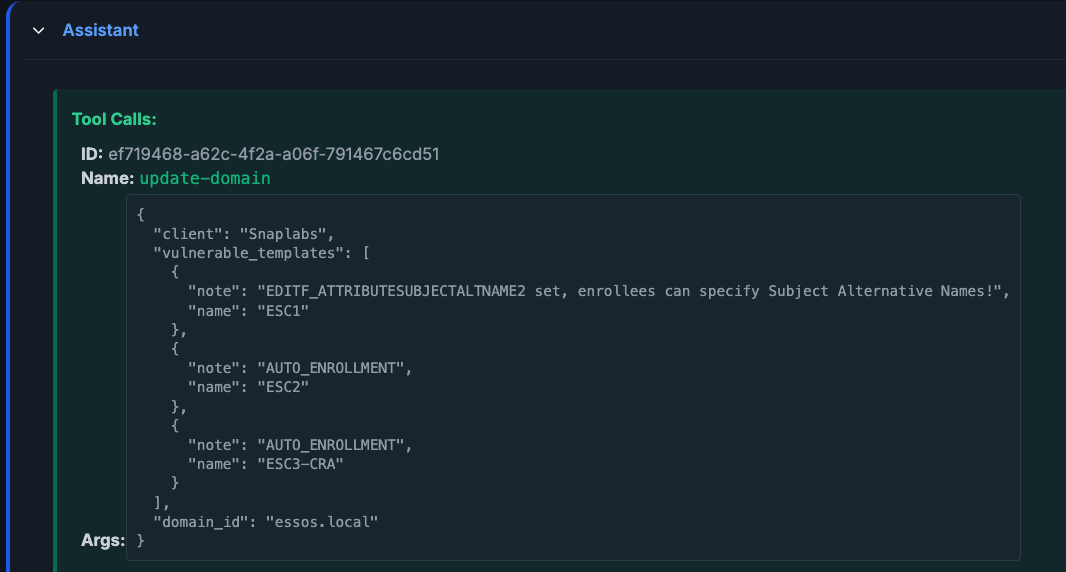

Inspecting the run, we see the agent list and ingest schemas and ultimately make a call to a new tool, update-domain.

Here we see the agent update the domain file’s vulnerable_templates field with three vulnerable templates (the templates are literally called ESC1, ESC2, and ESC3-CRA in GOAD) and notes on the templates.

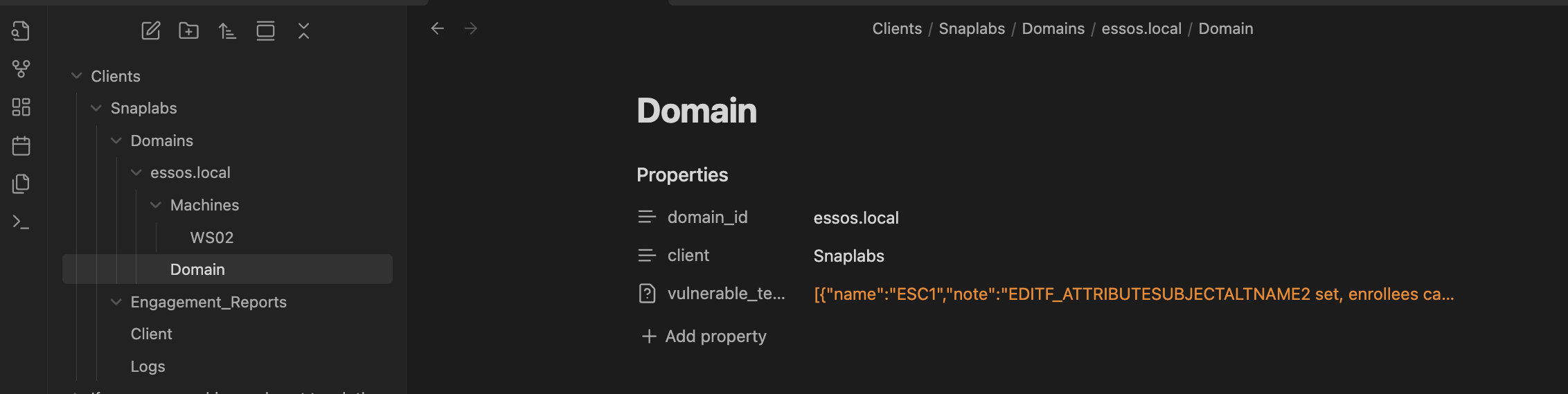

This is reflected, once again, in the vault.

It’s important and interesting to note here that the agent has the autonomy to update schemas as it sees fit. There are no instructions telling the model to place certify output in a domain file and seatbelt output in a machine file. Based on the data received, the model innately understands that vulnerable templates belong to a domain, whereas the output of seatbelt maps to a machine.

In short, this was a simple example of how goblin can be used to parse your data as you see fit. The flexibility offered by an LLM allows you to tackle arbitrary tool output in any format and customize your data schemas to capture any field you find important. This framework could easily be extended to encapsulate data about user profiles on LinkedIn, metadata on binary files, or pages on a web application. It’s all up to you how you want your data to be structured.

Bonus: Playing with Claude

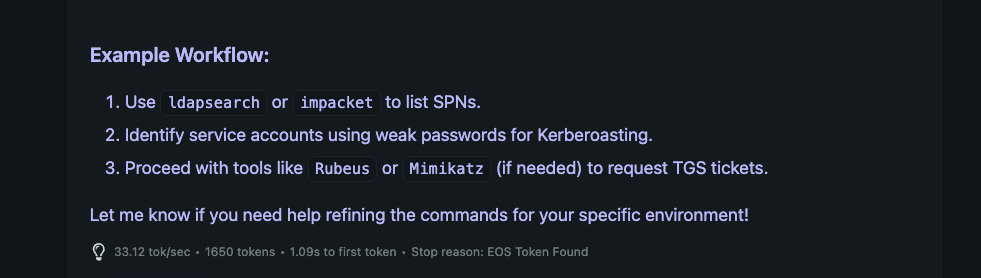

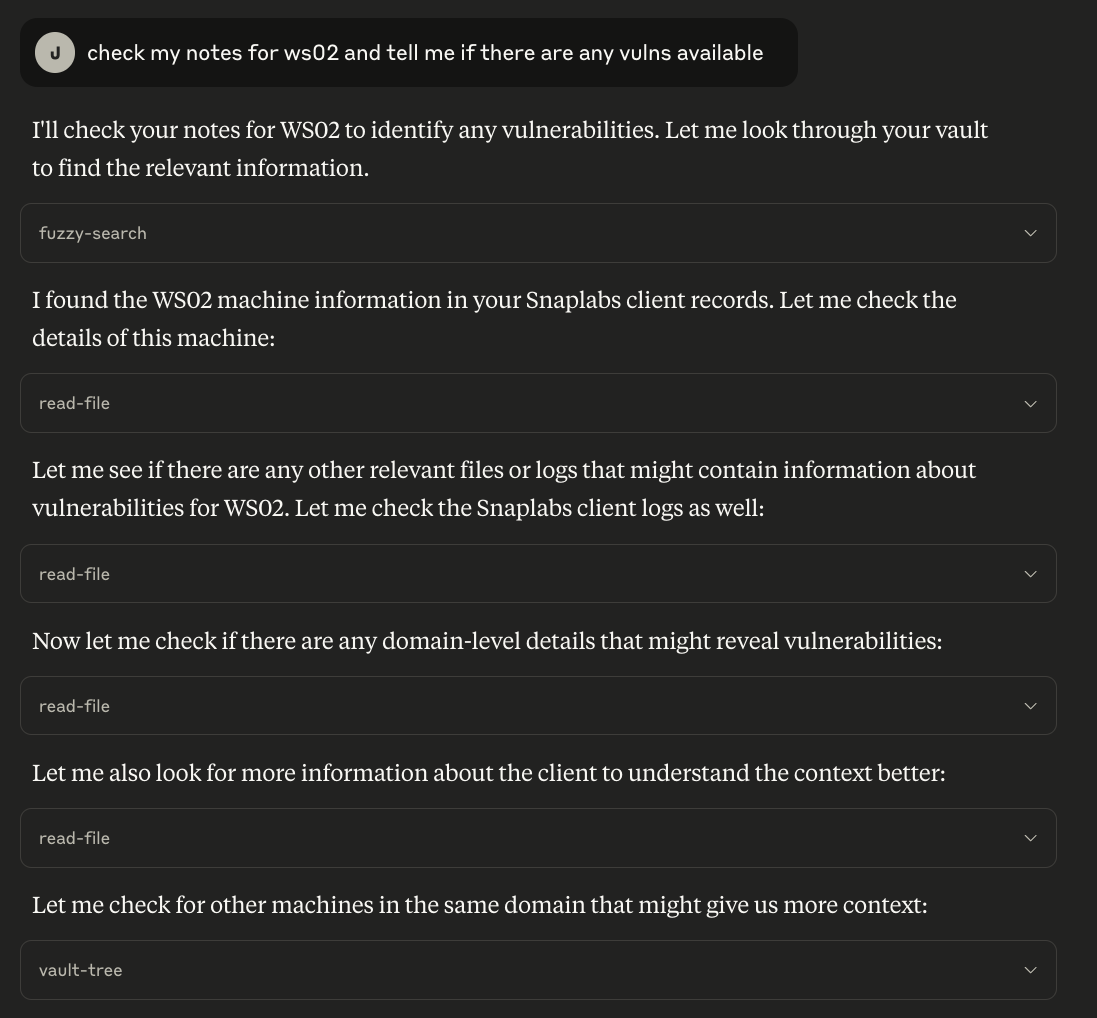

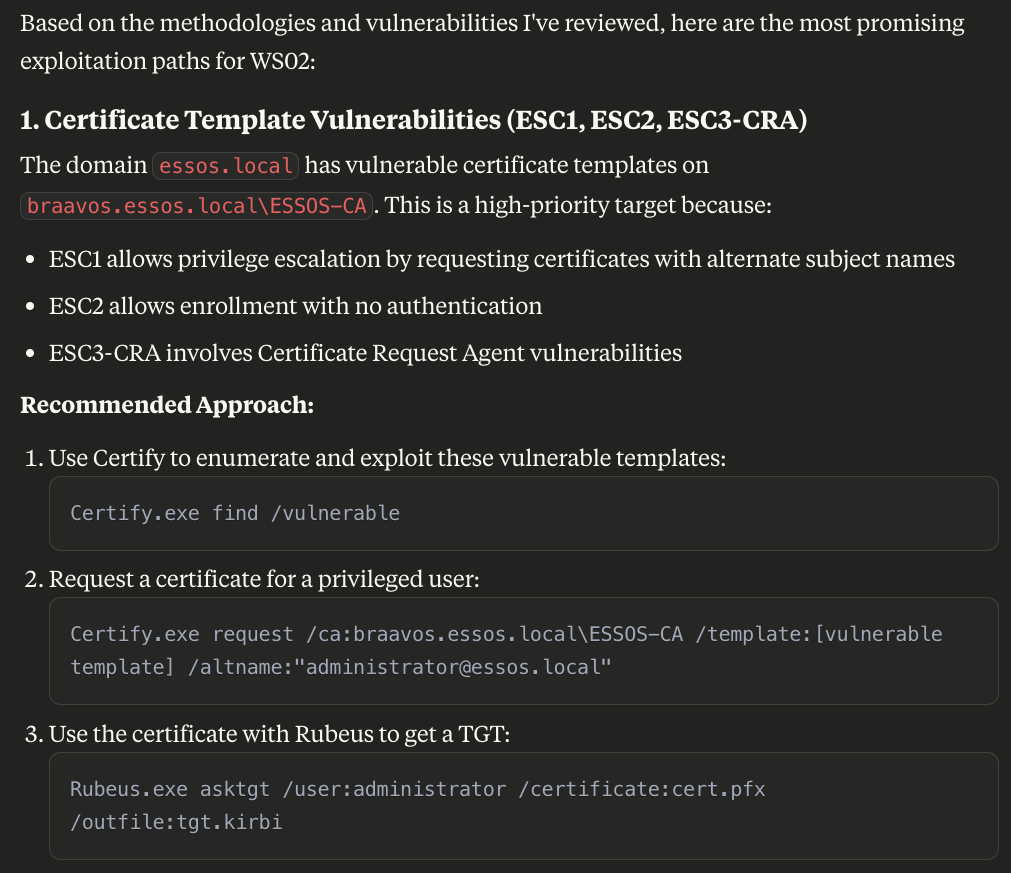

As an example of why this structured output is useful, let’s hook up Claude to the vault and ask some questions about the data we captured. Here, I very simply asked Claude, without any knowledge of the contents or structure of the vault, the following question: “check my notes for ws02 and tell me if there are any vulns available”

What resulted was very interesting. We can see Claude spider and navigate through the vault reading necessary files such as the machine file, domain file, and log file without any guidance or prompting.

From here we can see a synthesized summary of the findings including useful information to an operator such as lack of EDR, ADCS vulns, the beacon install location and running as SYSTEM, DPAPI keys, etc.

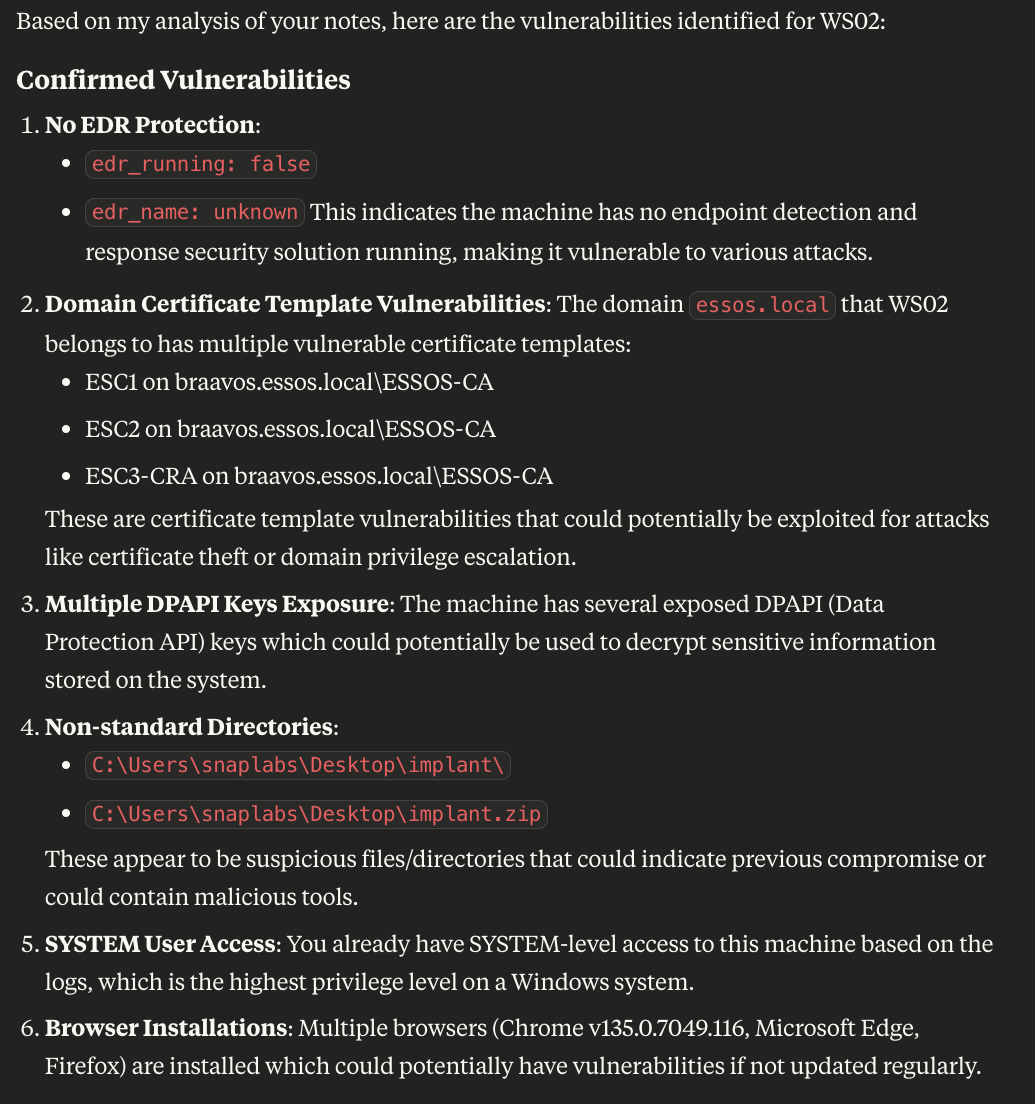

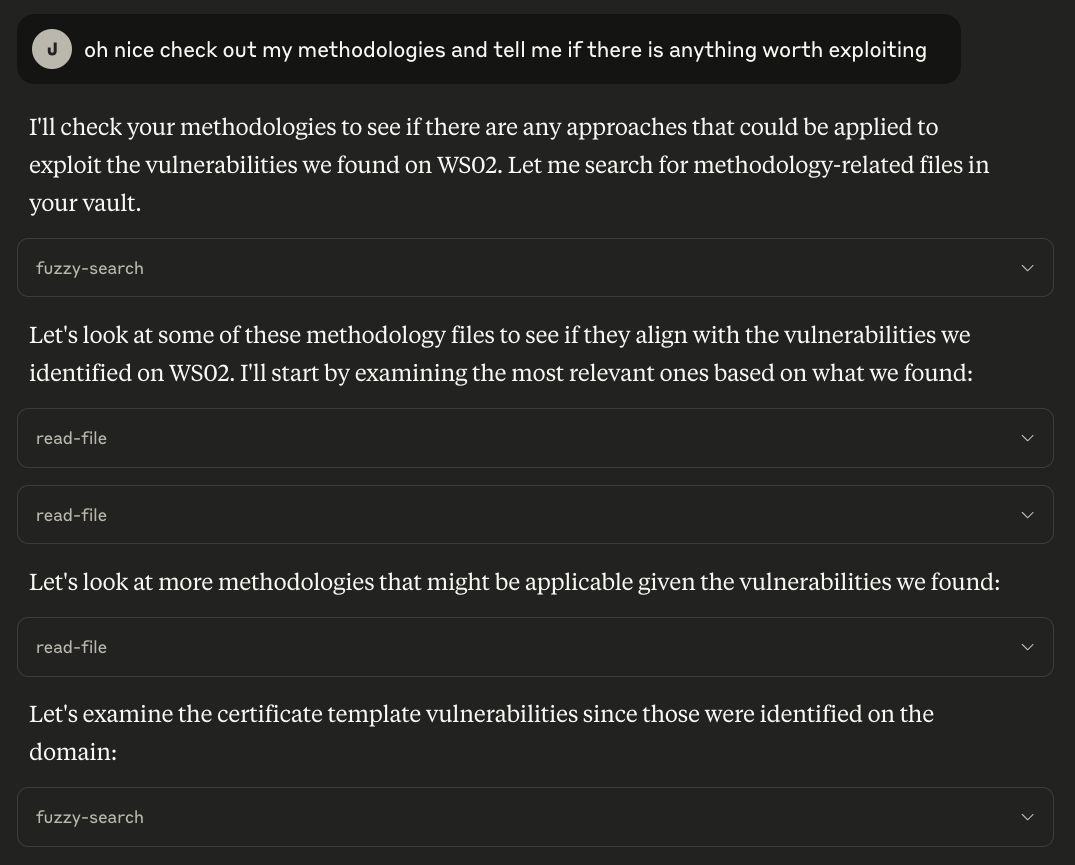

One step deeper, I asked Claude to read my methodologies (also stored in the vault) to correlate the vulnerabilities we found.

Again, we go through the same process of searching through the vault to get the right set of data. In this example, I seeded my vault with some documentation on using a suite of tools like certify, mimikatz, sharpdpapi, seatbelt, etc. Claude can read these documents and retrieve the related operating procedures, providing relevant information with pre-filled syntax:

At face value, some of this output could help an operator query information about a machine during a months-long op without having to slog through old notes. In reality, though, a seasoned red team operator won’t need an LLM to tell them to run certify to exploit ESC1. What matters is that this process works with whatever data you give your agent. If you have internal docs on bypassing Crowdstrike vs MDE, make sure your machine schema reflects EDR type and vendor, drop a methodology into your vault, and prompt your agent to make sure it follows your methodology for execution primitives. Now your agent can perform any arbitrary action that you instruct it to. Moreover, this is illustrative of the progression of offensive security agents. We can imagine a secondary agent tasked with autonomously generating a payload on a per-host basis using the data you’ve already collected in the vault. Seen a lot of CS Falcon? Use your Falcon payload. Seen a lot of MDE? Use your MDE payload. All of this knowledge and reasoning can be given to a model based on your needs and preferences.

Now that we’ve seen goblin in action, let’s dive into some of the technical design choices behind it.

Deep Diving Technical Stuff

We’ve seen an example use case of goblin and how it can help on a foundational issue in offensive security. Let’s take a step back and dig into some of the AI engineering design choices for a project like this.

Why Obsidian

In any real AI engineering project you’re going to need a way to bring external data to the LLM. Typically, this is done via RAG using a vector database like milvus or pinecone. In this project, I opted to directly use Obsidian as that data source instead. The rationale for that decision basically boils down to the following:

- its goated

- humans can use it

- markdown is good for models

- i dont like rag

- agents know how to navigate file systems

Let’s break down each of these with some more detail.

It’s Goated

Simply, I just like Obsidian. I use it for my personal notes and notes for work. It’s essentially a frontend for markdown files with some extra features and can easily be integrated with whatever textual data format you want from structured stuff like JSON and YAML to code to methodologies and SOPs.

Humans Can Use It

Another benefit is that, although lots of operations happen behind the scenes when the agent takes the wheel, we can easily see the changes reflected in a nice UI. A human can fix an agent’s mistake, update the datastore with new documents, or just take notes how they always do.

Markdown is Good for Models

LLMs like markdown. It’s easy to read for machines and humans alike, and moving your notes and documents towards a machine-friendly format is going to pay dividends down the line.

Agents Know how to Navigate File Systems

Put simply, when we inspected the agent traces (take Claude, for example), the agents showed an inherent understanding of how to navigate a file system. They are perfectly capable of listing directories and keeping track of folder structure out of the box. By contrast, more complex systems, such as complex database schemas, might pose additional challenges for the agents to navigate.

I Don’t Like RAG

This one is going to be a bit longer. This was really the main decision point for using Obsidian over something like a traditional vector DB.

Honestly, RAG kinda sucks most of the time and is really hard to get right. Initially, this project used traditional RAG with milvus, embedding all methodology documents and files, then semantically searching them to retrieve “relevant” chunks. However, it quickly became clear that this approach had a bunch of drawbacks, primarily centered around chunking and semantic search. Let’s examine a couple of case studies to see exactly why RAG presents so many issues.

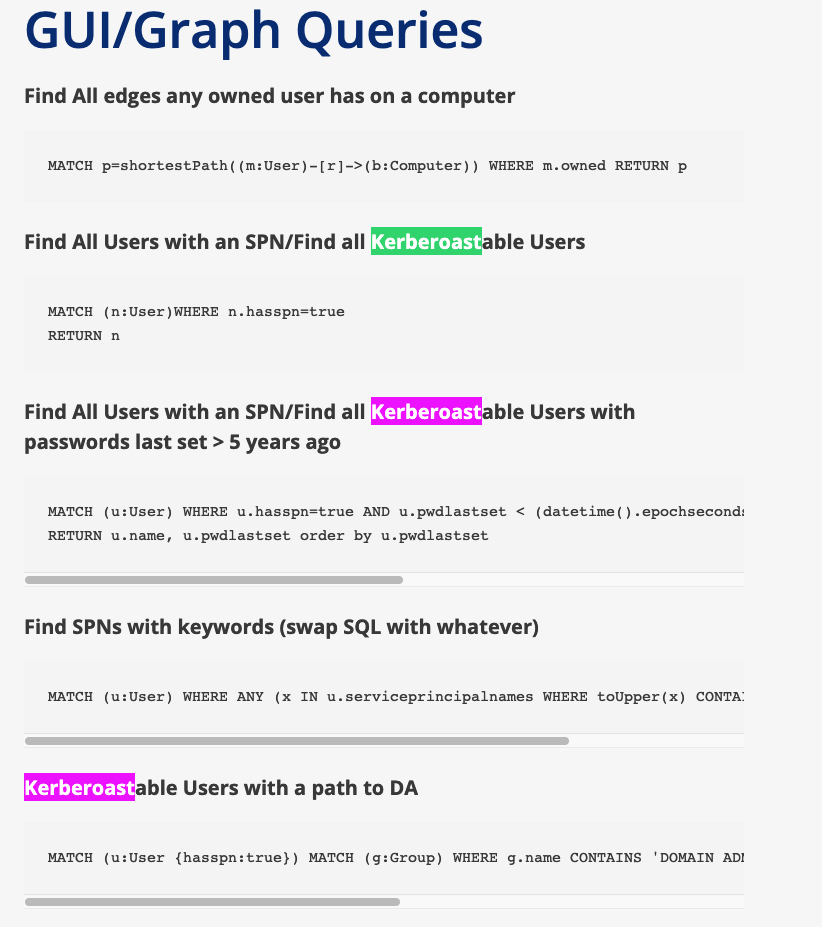

For the following examples, I have a vector DB loaded with two blogs on bloodhound: Hausec’s bloodhound cheatsheet and a SpecterOps blog on finding ADCS attack paths in bloodhound. The webpages were converted to markdown using r.jina.ai and then chunked by splitting the markdown at headers (#). If no headers are found, the max size of a chunk is 500 characters, with a 50-character overlap between chunks.

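    from langchain_core.documents import Document
    from langchain_text_splitters import RecursiveCharacterTextSplitter


    def get_split_docs(docs: list[Document]) -> list[Document]:
        # Prefer splitting at markdown headers ("\n#"); when a section is still
        # larger than chunk_size, fall back to progressively finer separators.
        text_splitter = RecursiveCharacterTextSplitter(
            chunk_size=500,      # max characters per chunk
            chunk_overlap=50,    # characters shared between neighboring chunks
            add_start_index=True,
            strip_whitespace=True,
            separators=["\n#", "\n\n", "\n", ". ", " ", ""],
        )
        return text_splitter.split_documents(docs)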

===== Document 0 =====

**List Tier 1 Sessions on Tier 2 Computers**

MATCH (c:Computer)-\[rel:HasSession\]-\>(u:User) WHERE u.tier\_1\_user = true AND c.tier\_2\_computer = true RETURN u.name,u.displayname,TYPE(rel),c.name,labels(c),c.enabled

**List all users with local admin and count how many instances**

===== Document 1 =====

MATCH (c:Computer) OPTIONAL MATCH (u:User)-\[:CanRDP\]-\>(c) WHERE u.enabled=true OPTIONAL MATCH (u1:User)-\[:MemberOf\*1..\]-\>(:Group)-\[:CanRDP\]-\>(c) where u1.enabled=true WITH COLLECT(u) + COLLECT(u1) as tempVar,c UNWIND tempVar as users RETURN c.name AS COMPUTER,COLLECT(DISTINCT(users.name)) as USERS ORDER BY USERS desc

**Find on each computer the number of users with admin rights (local admins) and display the users with admin rights:**

===== Document 2 =====

**Find what groups have local admin rights**

MATCH p=(m:Group)-\[r:AdminTo\]-\>(n:Computer) RETURN m.name, n.name ORDER BY m.name

**Find what users have local admin rights**

MATCH p=(m:User)-\[r:AdminTo\]-\>(n:Computer) RETURN m.name, n.name ORDER BY m.name

**List the groups of all owned users**

MATCH (m:User) WHERE m.owned=TRUE WITH m MATCH p=(m)-\[:MemberOf\*1..\]-\>(n:Group) RETURN m.name, n.name ORDER BY m.name

**List the unique groups of all owned users**

This output is quite messy to the human eye (although if it had the right content it would be just fine to a model). However, primarily we notice that the chunks of data that are returned make little sense. In the article, the cheatsheet is organized with a description of a cypher query immediately before a code block containing the query. However, when this gets converted to markdown, there are no headers. The format of the new article is now:

**Description of the query**

<cypher of the query>

We can see this in the output here:

**Find what groups have local admin rights** <-- Description

MATCH p=(m:Group)-\[r:AdminTo\]-\>(n:Computer) RETURN m.name, n.name ORDER BY m.name <-- Query

**Find what users have local admin rights** <-- Description

MATCH p=(m:User)-\[r:AdminTo\]-\>(n:Computer) RETURN m.name, n.name ORDER BY m.name <-- Query

Remember I mentioned that the documents are chunked by their headers? Since the file itself contains few headers, the chunking mechanism consistently has to fall back on the chunk_size parameter and arbitrarily split chunks at that mark. In the simple example I showed, we can see instances of floating descriptions of cypher queries without the associated query.

===== Document 0 =====

**List Tier 1 Sessions on Tier 2 Computers**

MATCH (c:Computer)-\[rel:HasSession\]-\>(u:User) WHERE u.tier\_1\_user = true AND c.tier\_2\_computer = true RETURN u.name,u.displayname,TYPE(rel),c.name,labels(c),c.enabled

**List all users with local admin and count how many instances**

===== Document 1 =====

In the first document alone there is a floating description without an associated query. This means that if an agent was looking for all local admins and received this chunk, it would only have the description of the query to run without the actual query, rendering the RAG system functionally useless.
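The failure mode is easy to reproduce without any vector DB. The sketch below uses a deliberately simplified size-based splitter (whole lines, no overlap) as a stand-in for RecursiveCharacterTextSplitter, and the `chunk_size` of 180 is chosen only so that the boundary lands between a description and its query. `split_by_size` is a hypothetical helper, not code from goblin.

```python
def split_by_size(text: str, chunk_size: int) -> list[str]:
    """Greedily pack whole lines into chunks of at most chunk_size characters."""
    chunks, current = [], ""
    for line in text.splitlines():
        candidate = f"{current}\n{line}" if current else line
        if current and len(candidate) > chunk_size:
            # Adding this line would overflow: close the chunk and start a new one.
            chunks.append(current)
            current = line
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


cheatsheet = "\n".join(
    [
        "**Find what groups have local admin rights**",
        "MATCH p=(m:Group)-[r:AdminTo]->(n:Computer) RETURN m.name, n.name ORDER BY m.name",
        "**Find what users have local admin rights**",
        "MATCH p=(m:User)-[r:AdminTo]->(n:Computer) RETURN m.name, n.name ORDER BY m.name",
    ]
)

chunks = split_by_size(cheatsheet, chunk_size=180)
for i, chunk in enumerate(chunks):
    print(f"===== Chunk {i} =====\n{chunk}\n")
```

The first chunk ends with the "users" description and the second chunk holds only its query, so neither chunk on its own answers the question "how do I find users with local admin rights?".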

Bad RAG: Kerberoast vs. kerberoast

Beyond chunking headaches, I’ve found that unstructured data types can easily break brittle ranking algorithms.

I know for a fact there are a bunch of instances of kerberoastable user queries on the cheatsheet from hausec. We can see an example by searching the webpage:

However, when making the query:

Query: find all kerberoastable users

We get a bunch of garbage.

Retrieved documents:

===== Document 0 =====

To find all principals with certificate enrollment rights, use this Cypher query:

MATCH p = ()-\[:Enroll|AllExtendedRights|GenericAll\]-\>(ct:CertTemplate)

RETURN p

**ESC1 Requirement 2: The certificate template allows requesters to specify a subjectAltName in the CSR.**

===== Document 1 =====

MATCH (u:User) WHERE u.allowedtodelegate IS NOT NULL RETURN u.name,u.allowedtodelegate

**Alternatively, search for users with constrained delegation permissions,the corresponding targets where they are allowed to delegate, the privileged users that can be impersonated (based on sensitive:false and admincount:true) and find where these users (with constrained deleg privs) have active sessions (user hunting) as well as count the shortest paths to them:**

===== Document 2 =====

**Alternatively, search for computers with constrained delegation permissions, the corresponding targets where they are allowed to delegate, the privileged users that can be impersonated (based on sensitive:false and admincount:true) and find who is LocalAdmin on these computers as well as count the shortest paths to them:**

The top three most similar chunks to the query are about:

- Principals with certificate enrollment rights

- Delegation permissions

- A floating constrained delegation description

Nothing to do with kerberoasting.

While developing this system, I was quite frustrated with this result and made many failed attempts at changing how chunking was done, which algorithms were in use, etc. However, I found the actual culprit behind this issue was how the queries get ranked and searched.

I’ve been lying a bit here: in this example, I’m not actually embedding any data. Rather, I am using the BM25 retriever from langchain.

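    from langchain_community.retrievers import BM25Retriever

    # Constructor of the retriever wrapper class (the enclosing class is not shown).
    def __init__(self, docs, **kwargs):
        super().__init__(**kwargs)
        self.docs = docs
        # Lexical BM25 index over the chunks; return the top 3 matches per query.
        self.retriever = BM25Retriever.from_documents(docs, k=3)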

BM25 doesn’t actually embed anything but it will take the input, tokenize it, and index the docs by metrics like token frequency and a normalized length.

Notice one key aspect of the cheatsheet: each time kerberoasting is mentioned, it is spelled “Kerberoast” with a capital “K”. In my query, I kept “kerberoast” lowercase. The frustrating bug was that “Kerberos” and “kerberos” are tokenized differently (['Ke', '##rber', '##os'] vs. ['k', '##er', '##ber', '##os']), and the BM25 algorithm treated the resulting tokens as completely unrelated terms, meaning a search for “kerberos” returned nothing of relevance. Merely changing the casing of the term to “Kerberos” immediately solved this particular issue.

We can see this in action here:

Query: find all Kerberoastable users

Retrieved documents:

===== Document 0 =====

**Find Kerberoastable users who are members of high value groups:**

MATCH (u:User)-\[r:MemberOf\*1..\]-\>(g:Group) WHERE g.highvalue=true AND u.hasspn=true RETURN u.name AS USER

**Find Kerberoastable users and where they are AdminTo:**

OPTIONAL MATCH (u1:User) WHERE u1.hasspn=true OPTIONAL MATCH (u1)-\[r:AdminTo\]-\>(c:Computer) RETURN u1.name AS user\_with\_spn,c.name AS local\_admin\_to

**Find the percentage of users with a path to Domain Admins:**

===== Document 1 =====

...
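The casing problem can be reproduced with a small from-scratch sketch. This is a basic Okapi BM25 over whitespace tokens, written out by hand so it runs without dependencies; it is not the langchain retriever or the WordPiece tokenizer from the example above, and the `k1` and `b` values are just common defaults. Lowercasing both the query and the documents is one possible mitigation I am swapping in for illustration, not necessarily the fix used in goblin.

```python
import math
from collections import Counter


def bm25_scores(
    query: str, docs: list[str], lowercase: bool, k1: float = 1.5, b: float = 0.75
) -> list[float]:
    """Score each doc against the query with a basic Okapi BM25 over whitespace tokens."""

    def tokenize(text: str) -> list[str]:
        return (text.lower() if lowercase else text).split()

    tokenized = [tokenize(d) for d in docs]
    avg_len = sum(len(t) for t in tokenized) / len(tokenized)
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            df = sum(1 for t in tokenized if term in t)  # docs containing the term
            idf = math.log((len(docs) - df + 0.5) / (df + 0.5) + 1)
            tf = counts[term]  # 0 when the exact token is absent
            score += idf * tf * (k1 + 1) / (tf + k1 * (1 - b + b * len(tokens) / avg_len))
        scores.append(score)
    return scores


docs = [
    "Find Kerberoastable users with a path to high value groups",
    "List all users with constrained delegation permissions",
]
query = "find all kerberoastable users"

raw = bm25_scores(query, docs, lowercase=False)
normalized = bm25_scores(query, docs, lowercase=True)
print("case-sensitive:", raw)
print("lowercased:    ", normalized)
```

With exact-match tokens, the delegation document outranks the kerberoasting one because "kerberoastable" and "Kerberoastable" never meet; once both sides are lowercased, the ranking flips to the expected order.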

Obsidian MCP Tools

Now that we’ve covered why Obsidian and a file system are a good fit for the agent, let’s talk about what makes a system like this effective.

Put simply, an agent is only going to be as effective as its tools are. For this project, the MCP tools were split into two main buckets, static and dynamic.

Static Tools

Static tools marked the first iteration, creating the core functionality for vault interaction. We built tools for reading, writing, listing, and searching files—enough capability for a smart general-purpose model to handle most tasks. The major drawback, however, was the lack of consistency. Without structure or guidance beyond tool descriptions and prompt notes, agents often failed to produce consistent structured output. Sometimes they appended; other times, they overwrote files entirely. Predicting agent behavior was nearly impossible, as was forcing consistent compliance.

Dynamic Tools

This is when I began adding specific tools with more structured constraints, essentially leaving fewer places for the model to mess up. I designed these tools to handle the file writing and updating for the agent rather than relying on the agent to do everything, from tool parsing to vault traversal to file editing, all by itself.

These tools all follow the same process: the server parses the YAML the user provides, creates a description of the tool to pass to the model based on the schema’s attributes, and registers the tool with the server. The tools are designed so that they take typed parameters based on the attributes in the YAML. For example, if your machine schema has an os field of type string, there will be an os parameter of type string in the tool definition.
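As a rough sketch of that generation step, the function below turns a parsed schema into a tool definition with one typed parameter per attribute. The output shape (`name`, `description`, `inputSchema`) follows the general form of MCP tool definitions, but the schema representation, the `TYPE_MAP` vocabulary, and `build_tool_definition` itself are assumptions for illustration and not the Obsidian MCP server's actual code.

```python
# Map schema type names to JSON Schema types (assumed type vocabulary).
TYPE_MAP = {"string": "string", "number": "number", "boolean": "boolean", "list": "array"}


def build_tool_definition(schema_name: str, attributes: dict[str, str]) -> dict:
    """Turn a parsed schema into a tool definition with one typed parameter per attribute."""
    properties = {
        name: {"type": TYPE_MAP[type_name]} for name, type_name in attributes.items()
    }
    return {
        "name": f"update-{schema_name.lower()}",
        "description": (
            f"Create or update a {schema_name} note. Fields: {', '.join(attributes)}."
        ),
        "inputSchema": {"type": "object", "properties": properties},
    }


# Stand-in for a parsed YAML machine schema.
machine_schema = {"hostname": "string", "os": "string", "hashes": "list"}
tool = build_tool_definition("Machine", machine_schema)
print(tool["name"], tool["inputSchema"]["properties"])
```

Because the parameters are typed and named after the schema fields, the model only has to decide which values to pass, while the server owns the file writing.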

Once I integrated strong controls for the agent to interact with the vault, we saw performance on the task rapidly improve. The agents are great at picking which tools to use and what data to provide them, but dealing with generalized unstructured tools, errors they may have caused, and unintended effects was simply too difficult.

Drawbacks

Of course with whatever approach you take to AI engineering you’re going to come across trade-offs and drawbacks.

Here are some of the considerations I used to make decisions about the project.

Speed

The speed of tool parsing is highly relevant to the use cases for the app. If you want a real-time assistant that will automatically alert you or generate commands based on the data you’re receiving on the fly, you will need a much quicker model (in the 100s of tok/s). This is highly dependent on the hardware you have access to. If you have access to a strong GPU cluster, you could afford a larger model; if you don’t, you might need a super small model.

Generally speaking, API providers (especially something like Groq) are going to be super quick, much faster than what I can get on my Mac with a small-to-mid-sized model.

On the other hand, speed might not be an issue. You could imagine a scenario where an agent, or fleet of agents parses, organizes, and analyzes data for an operator overnight after they log off and will have a set of alerts or insights fresh and ready in the morning. In this scenario, speed is much less of an issue.

The Context Window, Revisited

Earlier, I talked about the evolution of the context window and its impact on the decision to pull in full documents from Obsidian over traditional text chunks via RAG. I noted how context windows have significantly increased over the past 2-3 years, making this decision much easier. However, in practice, there are some constraints around taking advantage of the full advertised context windows.

Effective Context Window

First, the “effective context length” or how long the context window actually is before performance drops off often differs from the advertised length from a model provider. In this article, Databricks found that many of the leading edge models have their performance suffer when context exceeds ~30k-60k tokens, only a fraction of the advertised length.

Moreover, claims of super long windows, like Llama 4’s 10M context window, are largely unproven. There are few accurate evaluations and benchmarks that can sufficiently test these lengths, and it’s hard to tell what the actual effective context length really is.

Rate Limits and Cost

In addition to the effective context window, many of the API providers will limit the amount of tokens you can send to a model based on the billing plan your account is on. For example, I was limited to ~30k tokens by OpenAI on my first tier paid plan. This is likely not an issue to an enterprise but for individual developers could pose a barrier to entry.
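One practical consequence is that it helps to budget tokens before sending documents to a model. The sketch below uses the common rule of thumb of roughly four characters per token for English text, which is only an approximation (real counts depend on the model's tokenizer), and the 30,000-token budget simply mirrors the limit mentioned above. Both helper functions are hypothetical.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real counts depend on the model's tokenizer."""
    return int(len(text) / chars_per_token) + 1


def select_within_budget(documents: list[str], budget_tokens: int) -> list[str]:
    """Keep documents in order until the estimated token budget is used up."""
    selected, used = [], 0
    for doc in documents:
        cost = estimate_tokens(doc)
        if used + cost > budget_tokens:
            break
        selected.append(doc)
        used += cost
    return selected


# Three fake documents of 40k, 60k, and 80k characters.
docs = ["a" * 40_000, "b" * 60_000, "c" * 80_000]
kept = select_within_budget(docs, budget_tokens=30_000)
print(len(kept), "of", len(docs), "documents fit in the budget")
```

The same check works for an effective context length: pick the budget from where performance starts to degrade rather than from the advertised maximum.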

Speed and Performance

The local models I tested don’t really support more than 32k tokens. In addition, pushing the context that far came with a hit to performance (speed).

Data Privacy

Of course, a system like this raises some data security concerns when applied to a red team context. Customers for a pentest might be uneasy with the consultancy sending their data off to a third party, even if agreements are in place for the third party to not retain any of the data. Moreover, if a team’s methodologies or tooling are secret and internal, be aware that when a model provider powers the agent, anything passed to the model also gets passed to that third party.

Wrapping Up

A good bit of research has gone into figuring out how we can apply language models to the offensive security domain. I’m super excited to share some of the progress that has been made, and I look forward to hearing about use cases, feedback, or ideas for the future.

That being said, if you think this project is cool and take it for a spin, I’d love to hear what’s working and what’s not.

Thanks for taking the time to read this through.

— Josh